Section: New Results

Understanding user gestures for touch screen-based 3D User Interfaces

Participants: Aurélie Cohé, Martin Hachet.

Within the ANR project Instinct, we studied how users tend to use a touchscreen to interact with 3D content. Our main contributions were two studies of user behavior: one with a standard touchscreen and one with a pressure-sensitive touchscreen.

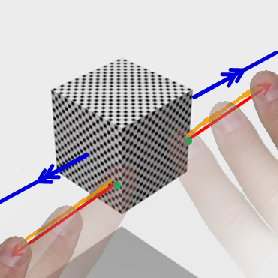

Multi-touch interfaces have become widespread with the adoption of smartphones. Although many people interact with 2D applications through touchscreens, interaction with 3D applications remains largely unexplored. Most 3D object manipulation techniques have been created by designers who generally left users out of the design process. We conducted a user study to better understand how non-technical users tend to interact with a 3D object through touchscreen input. In the experiment, users manipulated a 3D cube, shown from three viewpoints, performing rotations, scalings and translations (RST). Sixteen users participated, and 432 gestures were analyzed. To classify the data, we introduce a taxonomy of 3D manipulation gestures on touchscreens. We then identify a set of strategies that users employ to perform the requested cube transformations. Our findings suggest that each participant uses several strategies, one of which predominates. We also conducted a study comparing touchscreen and mouse interaction for 3D object manipulation. The results suggest that gestures differ depending on the device, and that touchscreens are preferred for the proposed tasks. Finally, we propose guidelines to help designers create more user-friendly tools. This work was published at the Graphics Interface (GI) conference [12] and in the Computers and Graphics journal [6].

Moreover, few works have examined the relation between the manipulated data and the amount of force applied by fingers sliding on a touch sensor. In another work, we conducted two user studies to better understand how users control pressure and how they tend to use this input modality. A first set of experiments allowed us to characterize pressure in relation to finger motion. Based on its results, we designed a second set of experiments focusing on the completion of 3D manipulation tasks from 2D gestures. The results indicate a strong relationship between the action participants intend to perform and the amount of force they apply when manipulating 3D objects. This finding opens promising new perspectives for enhancing user interfaces built on force-based touch sensors.

All of this work is also reported in the PhD thesis of Aurélie Cohé [4], which was defended on December 13, 2012.
