Section: New Results

Computer-aided image manipulation

Local texture-based color transfer and colorization

Participants : Benoit Arbelot, Romain Vergne, Thomas Hurtut, Joëlle Thollot.

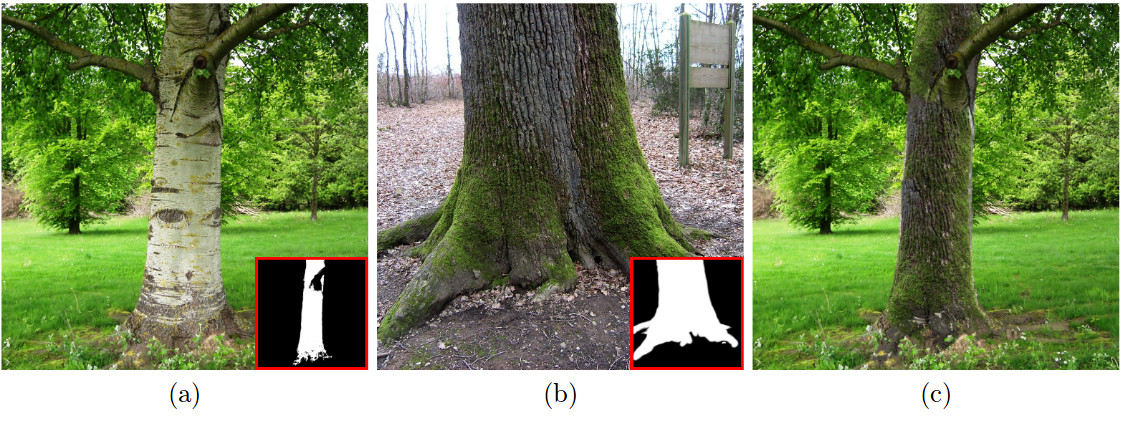

This work targets two related color manipulation problems: color transfer, which modifies the colors of an image, and colorization, which adds colors to a grayscale image. Automatic methods for both applications modify the input image using a reference image that contains the desired colors. Previous approaches usually do not address both applications, and they suffer from two main limitations: possibly misleading associations between input and reference regions, and poor spatial coherence around image structures. We propose a unified framework that uses the textural content of the images to guide both color transfer and colorization. Our method introduces an edge-aware texture descriptor based on region covariance, which allows for local color transformations. We show that our approach produces results comparable to or better than state-of-the-art methods in both applications (see Figure 4). This work is an extended version of an Expressive 2016 paper and was published in the Computers & Graphics (C&G) journal [1].
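The region covariance idea can be illustrated with a minimal NumPy sketch. It summarizes a rectangular region by the covariance matrix of per-pixel feature vectors, then compares two regions with the Förstner distance (square root of the summed squared logarithms of the generalized eigenvalues), a common choice for covariance descriptors. The feature set, the function names and the `eps` regularization are assumptions made for illustration. This plain descriptor is not edge-aware and is not the method of [1].

```python
import numpy as np

def pixel_features(img):
    """Per-pixel feature vectors for an H x W x 3 float image.

    Hypothetical feature set: (x, y, c0, c1, c2, |dL/dx|, |dL/dy|),
    where L is approximated by the mean of the three channels.
    """
    h, w, _ = img.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    lum = img.mean(axis=2)
    gy, gx = np.gradient(lum)  # derivatives along rows (y) and columns (x)
    return np.stack([xs, ys, img[..., 0], img[..., 1], img[..., 2],
                     np.abs(gx), np.abs(gy)], axis=-1)

def region_covariance(features, y0, y1, x0, x1):
    """Covariance matrix (d x d) of the feature vectors inside a rectangle."""
    patch = features[y0:y1, x0:x1].reshape(-1, features.shape[-1])
    return np.cov(patch, rowvar=False)  # rows are pixels, columns are features

def covariance_distance(c1, c2, eps=1e-6):
    """Förstner distance between two covariance matrices.

    A small multiple of the identity is added so that both matrices are
    strictly positive definite (assumed regularization).
    """
    d = c1.shape[0]
    a = c1 + eps * np.eye(d)
    b = c2 + eps * np.eye(d)
    # Generalized eigenvalues of (a, b) are the eigenvalues of b^-1 a;
    # they are real and positive for SPD inputs, so drop round-off imaginary parts.
    lam = np.linalg.eigvals(np.linalg.solve(b, a)).real
    return float(np.sqrt(np.sum(np.log(lam) ** 2)))
```

Matching each input region against candidate reference regions with this distance is one way such descriptors can guide a local color transfer; the edge-aware weighting described in [1] is omitted here.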


Texture Transfer Based on Texture Descriptor Variations

Participants : Benoit Arbelot, Romain Vergne, Thomas Hurtut, Joëlle Thollot.


We tackle the problem of image-space texture transfer, which aims to modify the material of an object or surface by replacing its input texture with a reference texture. The main challenge of texture transfer is to faithfully reproduce the reference texture patterns while preserving the input texture variations caused by its environment, such as illumination or shape variations. We propose to characterize these input texture variations with a texture descriptor composed of local luminance and local gradient orientation and magnitude. We then introduce a guided texture synthesis algorithm that synthesizes a texture resembling the reference texture while exhibiting the input texture variations. The main contribution of our algorithm is its ability to locally deform the reference texture according to the local texture descriptors, in order to better reproduce the input texture variations. We show that our approach produces results comparable to current state-of-the-art approaches while requiring less user input. Preliminary results of this work are presented in a research report [14].
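A per-pixel descriptor of the kind described above (local luminance, local gradient orientation and magnitude) can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions, not the implementation from [14]: it assumes a square box window of radius `r`, finite-difference gradients, and a structure-tensor estimate of the dominant orientation, which is robust to the sign flips of gradients in oscillating textures. The function names and the window radius are hypothetical.

```python
import numpy as np

def box_mean(a, r):
    """Mean of a 2-D array over a (2r+1) x (2r+1) window, with edge padding."""
    k = 2 * r + 1
    p = np.pad(a, r, mode="edge")
    c = np.cumsum(np.cumsum(p, axis=0), axis=1)   # summed-area table
    c = np.pad(c, ((1, 0), (1, 0)))               # leading zero row/column
    s = c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]
    return s / (k * k)

def texture_descriptor(lum, r=3):
    """Return an H x W x 3 array of (mean luminance, orientation, magnitude).

    Orientation is the dominant gradient angle in [-pi/2, pi/2] taken from
    the window-averaged structure tensor (an assumed choice); magnitude is
    the RMS gradient magnitude over the window.
    """
    gy, gx = np.gradient(lum)          # d/drow (y), d/dcol (x)
    mean_lum = box_mean(lum, r)
    jxx = box_mean(gx * gx, r)         # structure tensor entries
    jyy = box_mean(gy * gy, r)
    jxy = box_mean(gx * gy, r)
    theta = 0.5 * np.arctan2(2.0 * jxy, jxx - jyy)
    mag = np.sqrt(jxx + jyy)
    return np.stack([mean_lum, theta, mag], axis=-1)
```

For a pattern that varies only along x (vertical stripes), the orientation channel is 0; for horizontal stripes it is ±π/2. In a guided synthesis, such descriptors could be compared between input and reference neighborhoods to decide how to locally deform the reference texture.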