Section: New Results

Illumination Simulation and Materials

Point-Based Rendering for Homogeneous Participating Media with Refractive Boundaries

Participants : Beibei Wang, Nicolas Holzschuch.

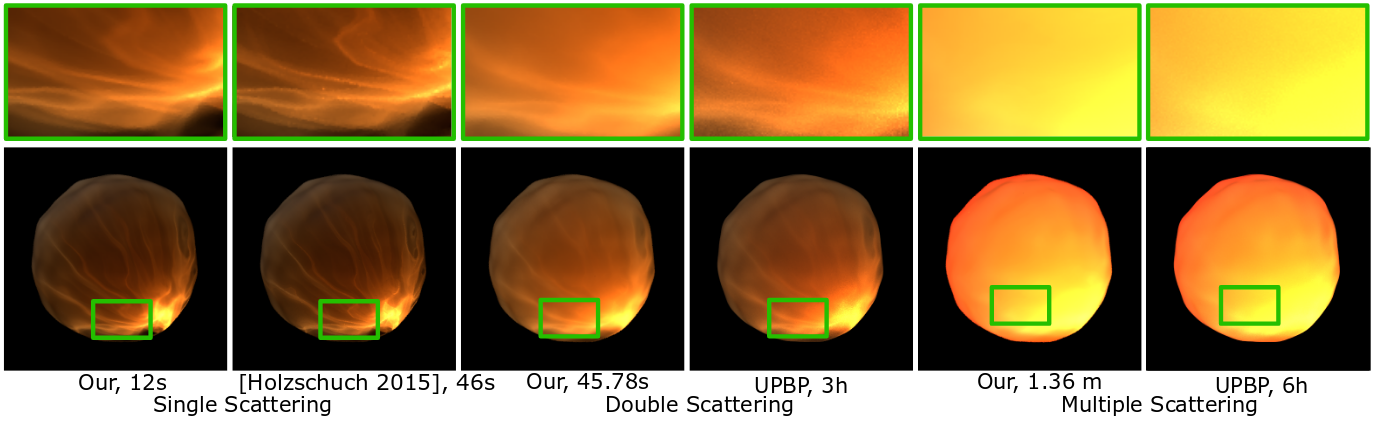

Illumination effects in translucent materials combine several physical phenomena: refraction at the surface, and absorption and scattering inside the material. Because refraction can focus light deep inside the material, where it is then scattered, practical illumination simulation inside translucent materials is difficult. We present a Point-Based Global Illumination method for light transport in homogeneous translucent materials with refractive boundaries. We start by placing light samples inside the translucent material and organizing them into a spatial hierarchy. At rendering time, we gather light from these samples for each camera ray. We compute the sample contributions for single, double and multiple scattering separately, then add them. We present two implementations of our algorithm: an offline version for high-quality rendering and an interactive GPU implementation. The offline version provides significant speed-ups and a reduced memory footprint compared to state-of-the-art algorithms, with no visible impact on quality (Figure 6). The GPU version yields interactive frame rates: 30 fps when moving the viewpoint, 25 fps when editing the light position or the material parameters. This work has been published in IEEE Transactions on Visualization and Computer Graphics [7].
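
The hierarchical gathering step can be illustrated with a minimal sketch. It is not the published implementation: the binary median-split tree, the `kernel` falloff (exponential attenuation over squared distance) and the `max_angle` opening criterion are assumptions chosen for illustration, and the separate treatment of single, double and multiple scattering is omitted.

```python
import math
import random

def widest_axis(samples):
    """Index of the axis along which the sample positions spread the most."""
    def extent(i):
        values = [pos[i] for pos, _ in samples]
        return max(values) - min(values)
    return max(range(3), key=extent)

class Node:
    """Cluster of light samples: total power, power-weighted centroid, bounding radius."""
    def __init__(self, samples):
        self.power = sum(p for _, p in samples)
        self.center = tuple(
            sum(pos[i] * p for pos, p in samples) / self.power for i in range(3)
        )
        self.radius = max(math.dist(self.center, pos) for pos, _ in samples)
        self.children = []
        if len(samples) > 1:
            # Median split along the widest axis (an illustrative choice).
            axis = widest_axis(samples)
            ordered = sorted(samples, key=lambda s: s[0][axis])
            mid = len(ordered) // 2
            self.children = [Node(ordered[:mid]), Node(ordered[mid:])]

def kernel(x, center, power, sigma_t):
    """Hypothetical transfer kernel: attenuation exp(-sigma_t d) over d^2."""
    d = max(math.dist(x, center), 1e-3)
    return power * math.exp(-sigma_t * d) / (d * d)

def gather(node, x, sigma_t, max_angle):
    """Sum contributions at x, using a whole cluster when it looks small from x."""
    d = math.dist(x, node.center)
    if not node.children or node.radius < max_angle * d:
        return kernel(x, node.center, node.power, sigma_t)
    return sum(gather(c, x, sigma_t, max_angle) for c in node.children)

random.seed(0)
samples = [
    ((random.random(), random.random(), random.random()), random.uniform(0.5, 1.5))
    for _ in range(200)
]
root = Node(samples)
x = (3.0, 2.0, 1.5)
exact = gather(root, x, sigma_t=1.0, max_angle=0.0)   # always descends to the leaves
approx = gather(root, x, sigma_t=1.0, max_angle=0.3)  # stops early at distant clusters
```

With `max_angle=0.0` the traversal visits every leaf and matches a brute-force sum over all samples; larger values trade accuracy for fewer kernel evaluations.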

A Two-Scale Microfacet Reflectance Model Combining Reflection and Diffraction

Participants : Nicolas Holzschuch, Romain Pacanowski.

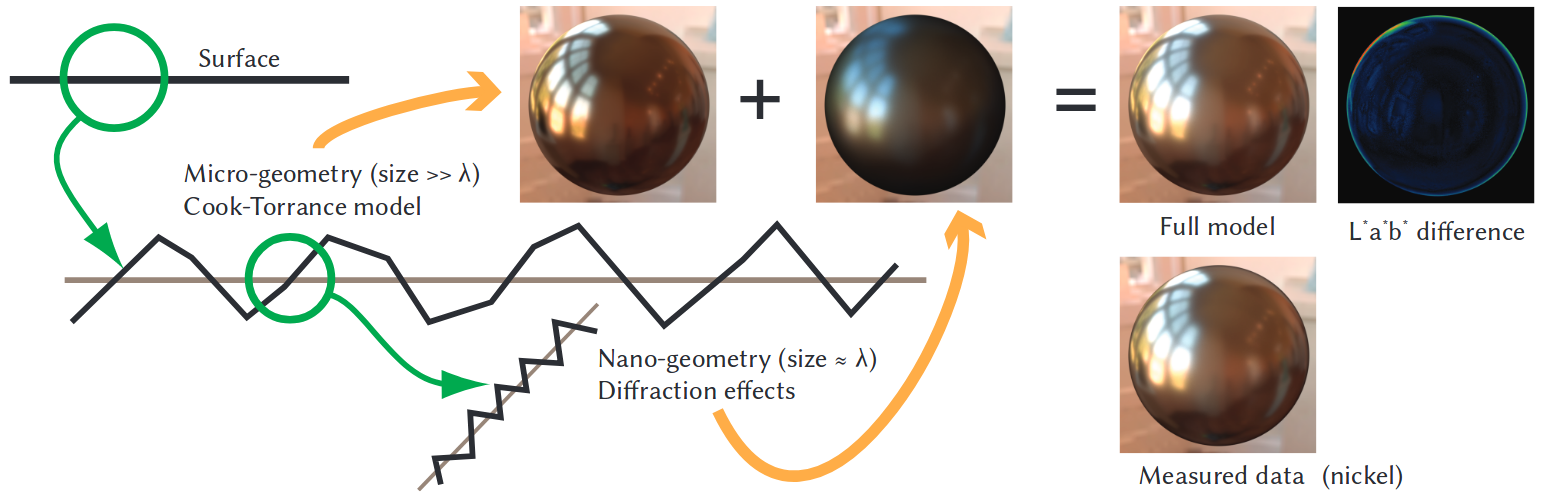

Adequate reflectance models are essential for the production of photorealistic images. Microfacet reflectance models predict the appearance of a material at the macroscopic level based on microscopic surface details. They provide a good match with measured reflectance in some cases, but not always. This discrepancy between the behavior predicted by microfacet models and the observed behavior has puzzled researchers for a long time. In this work, we show that diffraction effects in the micro-geometry provide a plausible explanation. We describe a two-scale reflectance model that separates geometry details much larger than the wavelength from those of a size comparable to the wavelength. The former result in the standard Cook-Torrance model. The latter are responsible for diffraction effects. Diffraction effects at the smaller scale are convolved by the micro-geometry normal distribution. The resulting two-scale model provides a very good approximation to measured reflectances (Figure 7). This work has been published in ACM Transactions on Graphics (TOG) [2] and presented at SIGGRAPH 2017. It was also presented at the “tout sur les BRDF” day in Poitiers (France) [17].
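
For reference, the Cook-Torrance model named above is commonly written in the form below, where $F$ is the Fresnel term, $D$ the micro-facet normal distribution, $G$ the shadowing-masking term, $\mathbf{h}$ the half-vector between the incoming direction $\mathbf{i}$ and the outgoing direction $\mathbf{o}$, and $\mathbf{n}$ the surface normal. The second relation is only a schematic reading of the two-scale description in this summary: the symbols $\rho_{\mathrm{diff}}$ and $S_{\mathrm{diff}}$ and the additive combination are assumptions made for illustration, and the exact expression should be taken from the paper [2].

$$
\rho_{\mathrm{CT}}(\mathbf{i},\mathbf{o}) = \frac{F(\mathbf{i}\cdot\mathbf{h})\, D(\mathbf{h})\, G(\mathbf{i},\mathbf{o})}{4\,(\mathbf{n}\cdot\mathbf{i})\,(\mathbf{n}\cdot\mathbf{o})},
\qquad
\rho \approx \rho_{\mathrm{CT}} + \rho_{\mathrm{diff}},
\quad
\rho_{\mathrm{diff}} \propto S_{\mathrm{diff}} * D
$$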

Precomputed Multiple Scattering for Light Simulation in Participating Medium

Participants : Beibei Wang, Nicolas Holzschuch.

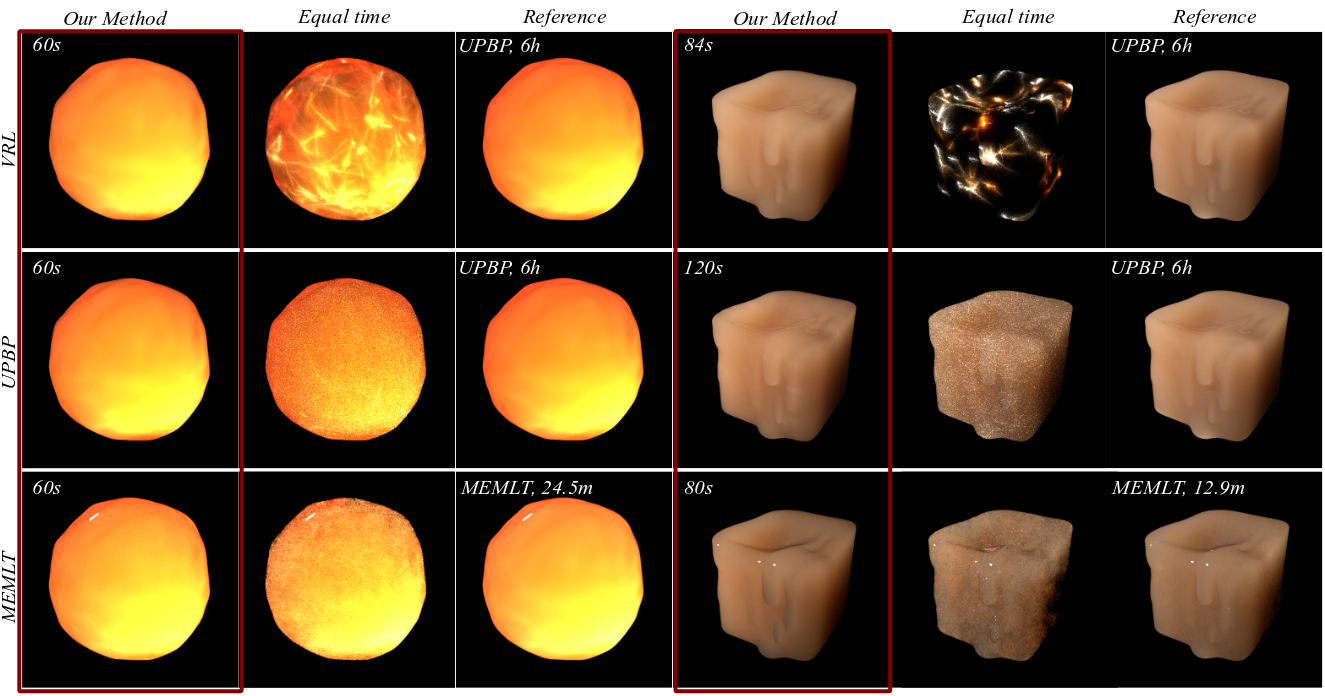

Illumination simulation involving participating media is computationally intensive. The overall aspect of the material depends on simulating a large number of scattering events inside the material. Combined, the contributions of these scattering events form a smooth illumination. Computing them using ray-tracing or photon-mapping algorithms is expensive: convergence time is high, and images rendered before convergence are of low quality. In this work, we precompute the result of multiple scattering events, assuming an infinite medium, and store it in two 4D tables. These precomputed tables can be used with many rendering algorithms, such as Virtual Ray Lights (VRL), Unified Point Beams and Paths (UPBP) or Manifold Exploration Metropolis Light Transport (MEMLT), greatly reducing the convergence time (Figure 8). The original algorithm handles low-order scattering (single and double scattering), while our precomputations are used for multiple scattering (more than two scattering events). This work was presented at SIGGRAPH [12].
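
As a rough illustration of how a host renderer might consume such a table, the sketch below performs a multilinear lookup in a 4D grid and adds the result to a low-order term. The table contents, the meaning of its four axes and the value of `low_order` are placeholders, not the parameterization used in the paper; only the split between low-order scattering and a tabulated multiple-scattering term comes from the description above.

```python
from itertools import product

def lookup(table, shape, coords):
    """Multilinear interpolation in a nested-list table.

    coords are continuous grid indices, one per axis; each axis needs
    at least two entries. Values outside the grid are clamped.
    """
    base, frac = [], []
    for c, n in zip(coords, shape):
        c = min(max(c, 0.0), n - 1.0)
        i = min(int(c), n - 2)      # lower corner of the enclosing cell
        base.append(i)
        frac.append(c - i)
    total = 0.0
    for corner in product((0, 1), repeat=len(shape)):
        weight = 1.0
        cell = table
        for b, f, offset in zip(base, frac, corner):
            weight *= f if offset else 1.0 - f
            cell = cell[b + offset]
        total += weight * cell
    return total

# Placeholder 4D table (3 x 3 x 3 x 3) filled with a simple linear function.
shape = (3, 3, 3, 3)
table = [[[[i + 2 * j + 3 * k + 4 * l for l in range(3)]
           for k in range(3)]
          for j in range(3)]
         for i in range(3)]

low_order = 0.25                                   # hypothetical single + double scattering
multiple = lookup(table, shape, (0.5, 1.25, 1.0, 1.75))
radiance = low_order + multiple
```

Because the lookup replaces a long random walk with a constant-time interpolation, the host algorithm only has to converge on the low-order terms.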

The Effects of Digital Cameras Optics and Electronics for Material Acquisition

Participants : Nicolas Holzschuch, Romain Pacanowski.

For material acquisition, we use digital cameras and process the pictures. We usually treat the cameras as perfect pinhole cameras, with each pixel providing a point sample of the incoming signal. In this work, we study the impact of the camera's optical and electronic systems. Optical system effects are modelled by the Modulation Transfer Function (MTF). Electronic system effects are modelled by the Pixel Response Function (PRF). The former is convolved with the incoming signal; the latter is multiplied by it. We provide a model for both effects and study their impact on the measured signal. For high-frequency incoming signals, the convolution results in a significant decrease in measured intensity, especially at grazing angles. We show that this model explains the strange behaviour observed in the MERL BRDF database at grazing angles. This work has been presented at the Workshop on Material Appearance Modeling [8].
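
The intensity loss for high-frequency signals can be reproduced with a small 1D experiment. This is a toy sketch, not the model from the paper: the Gaussian blur kernel standing in for the optical effect, the constant `pixel_response` of 0.9 and the signal widths are all assumed values.

```python
import math

def gaussian(n, center, width):
    """Unit-height Gaussian bump sampled at n integer positions."""
    return [math.exp(-0.5 * ((i - center) / width) ** 2) for i in range(n)]

def convolve_same(signal, kernel):
    """Same-size discrete convolution with zero padding.

    The kernel is assumed symmetric, so no flip is needed.
    """
    half = len(kernel) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i + k - half
            if 0 <= j < len(signal):
                acc += w * signal[j]
        out.append(acc)
    return out

def measure(signal, blur, pixel_response):
    """Optical blur (convolution) followed by a per-pixel response (product)."""
    blurred = convolve_same(signal, blur)
    return [b * r for b, r in zip(blurred, pixel_response)]

n = 201
raw_blur = gaussian(21, 10, 3.0)
blur = [w / sum(raw_blur) for w in raw_blur]   # normalized: preserves total energy
pixel_response = [0.9] * n                     # hypothetical uniform pixel response

narrow = gaussian(n, 100, 1.0)    # sharp, high-frequency peak
wide = gaussian(n, 100, 30.0)     # smooth, low-frequency signal

narrow_peak = max(measure(narrow, blur, pixel_response))
wide_peak = max(measure(wide, blur, pixel_response))
```

Both inputs have a peak of 1, but after the blur the narrow peak is measured far below the 0.9 the pixel response alone would give, while the wide signal is almost unaffected.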

A Versatile Parameterization of Measured Material Manifolds

Participants : Cyril Soler, Kartic Subr, Derek Nowrouzezahrai.

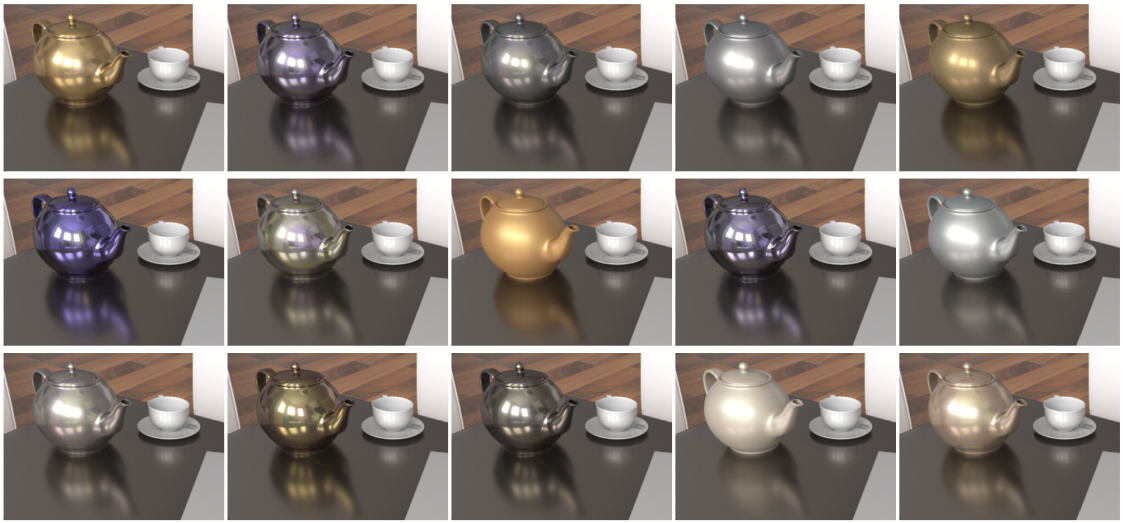

A popular approach for computing photorealistic images of virtual objects applies reflectance profiles measured from real surfaces. This introduces several challenges: the memory needed to faithfully capture realistic material reflectance is large, the choice of materials is limited to the set of measurements, and image synthesis using the measured data is costly. Typically, this data is compressed either by projecting it onto a subset of its linear principal components or by applying non-linear methods. The former requires prohibitively large numbers of components to faithfully represent the input reflectance, whereas the latter necessitates costly algorithms to extrapolate reflectance data. We learn an underlying, low-dimensional non-linear reflectance manifold amenable to the rapid exploration and rendering of real-world materials. We show that interpolated materials can be expressed as linear combinations of the measured data, despite lying on an inherently non-linear manifold. This allows us to efficiently interpolate and extrapolate measured BRDFs, and to render directly from the manifold representation. To do so, we rely on a Gaussian process latent variable model of reflectance. We demonstrate the utility of our representation in the context of both high-performance and offline rendering with materials interpolated from real-world captured BRDFs. This work has been accepted for publication at the Eurographics 2018 conference.
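
The claim that interpolated materials are linear combinations of the measured data follows from the form of a Gaussian process posterior mean, $\mu(x_*) = k_*^\top K^{-1} Y$: the weights $K^{-1} k_*$ depend non-linearly on the latent position $x_*$, but they multiply the measured data $Y$ linearly. The sketch below illustrates this with plain Gaussian process regression over fixed latent coordinates. It does not learn the latent variable model itself, and the RBF kernel, the latent positions and the toy four-entry BRDF vectors are assumptions.

```python
import math

def rbf(a, b, length=1.0):
    """Squared-exponential kernel between two latent points."""
    sq = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-0.5 * sq / length ** 2)

def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    w = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(M[r][c] * w[c] for c in range(r + 1, n))
        w[r] = (M[r][n] - tail) / M[r][r]
    return w

def interpolation_weights(latents, query, noise=1e-8):
    """Weights K^{-1} k_* of the GP posterior mean (K is symmetric)."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(latents)]
         for i, a in enumerate(latents)]
    k_star = [rbf(a, query) for a in latents]
    return solve(K, k_star)

def interpolate(brdfs, weights):
    """Linear combination of the measured BRDF vectors."""
    return [sum(w * y[d] for w, y in zip(weights, brdfs))
            for d in range(len(brdfs[0]))]

# Toy data: three "measured" materials with hypothetical latent coordinates.
latents = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
brdfs = [[0.9, 0.5, 0.2, 0.1], [0.3, 0.3, 0.3, 0.3], [0.1, 0.2, 0.6, 0.8]]

weights = interpolation_weights(latents, (0.4, 0.3))
material = interpolate(brdfs, weights)
```

Querying at one of the measured latent positions returns weights close to a one-hot vector, so the corresponding measured material is recovered.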
