Section: New Results

Human-Computer Partnerships

Participants: Wendy Mackay [correspondent], Baptiste Caramiaux, Téo Sanchez, Marianela Ciolfi Felice, Carla Griggio, Shu Yuan Hsueh, Wanyu Liu, John MacCallum, Nolwenn Maudet, Joanna McGrenere, Midas Nouwens, Andrew Webb.

ExSitu is interested in designing effective human-computer partnerships in which expert users control their interaction with technology. Rather than treating human users as the 'input' to a computer algorithm, we explore human-centered machine learning, where the goal is to use machine learning and other techniques to increase human capabilities. Whereas much of human-computer interaction research focuses on measuring and improving productivity, our specific goal is to create what we call 'co-adaptive systems', which are discoverable, appropriable and expressive for the user.


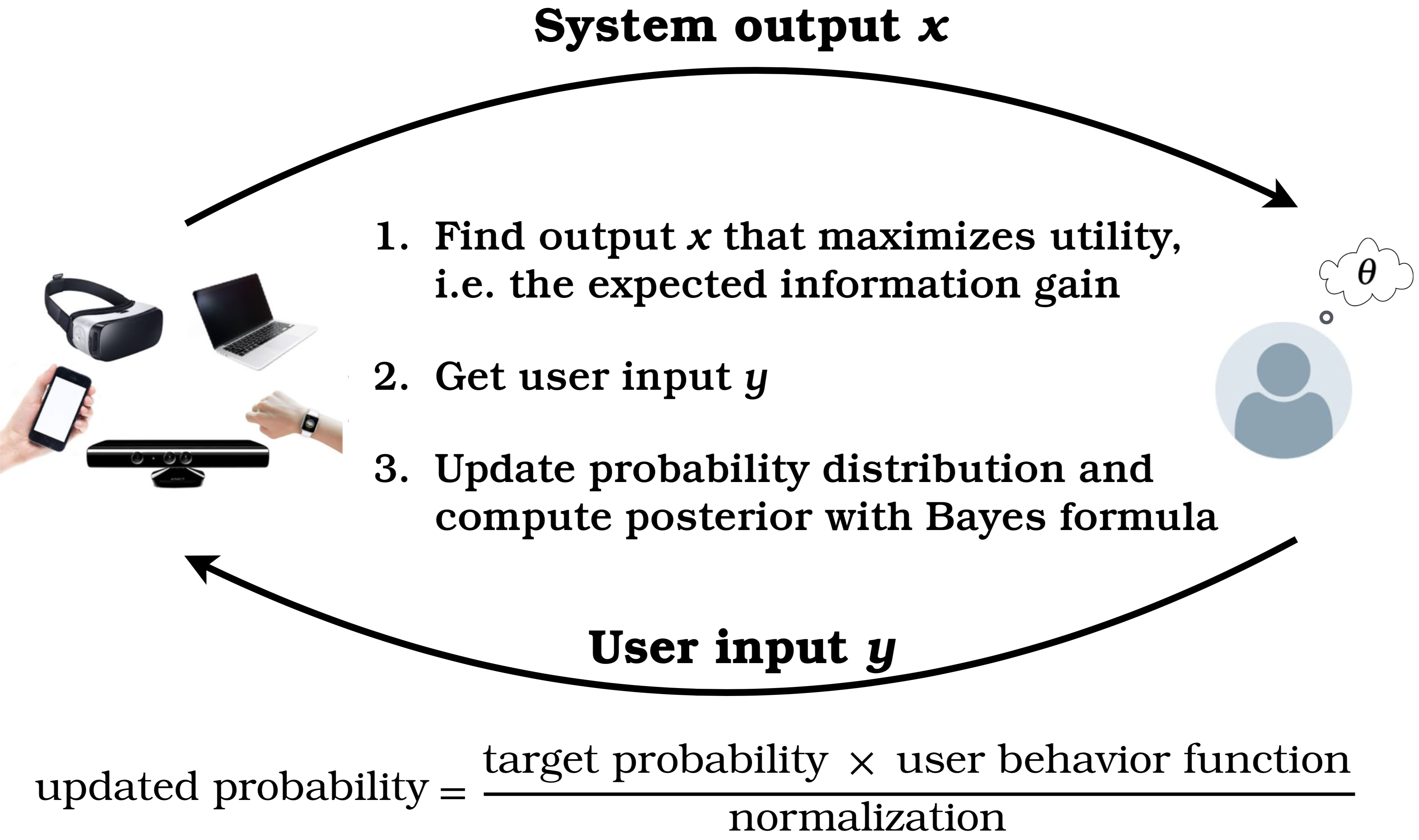

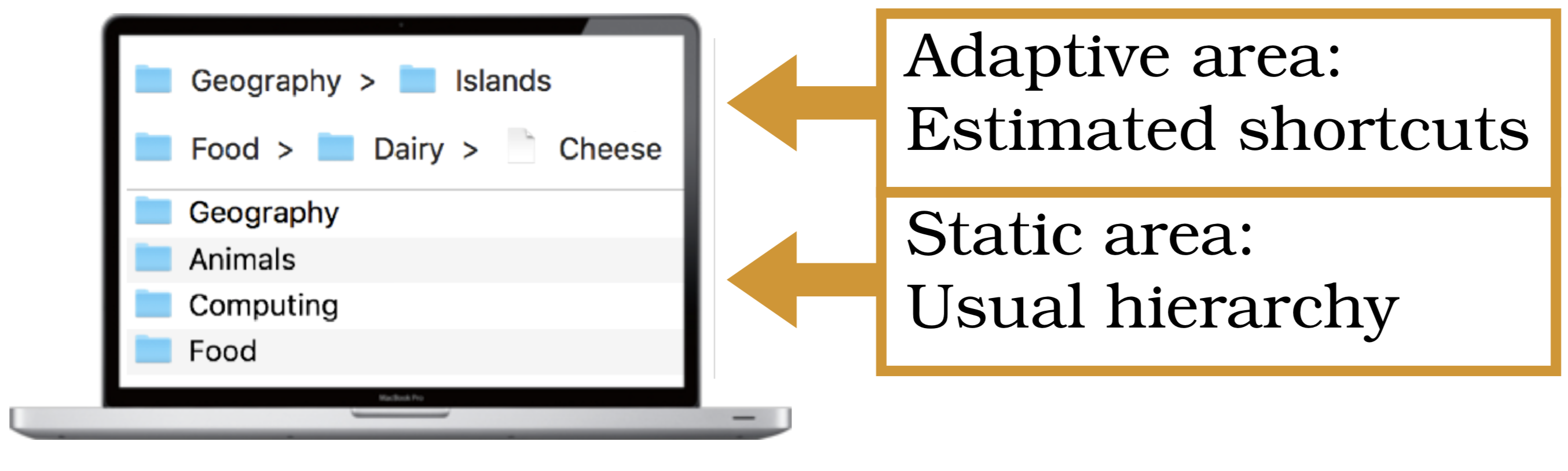

The Bayesian Information Gain (BIG) project uses Bayesian Experimental Design, where the criterion is to maximize the information-theoretic concept of mutual information, also known as information gain (fig. 4-left). The resulting interactive system "runs experiments" on the user in order to maximize the information gained from the user's next input, and thus reaches the user's goal more efficiently. BIGnav applies BIG to multiscale navigation [7]. Rather than simply executing the navigation commands issued by the user, BIGnav interprets them to update its knowledge about the user's intended target, and then computes a new view that maximizes the expected information gain provided by the user's next input. This view is chosen so that, from the system's perspective, the possible navigation commands are as close to equally probable as possible. BIGFile [22] (ACM CHI Honorable Mention award) applies a similar approach to file navigation, with a split interface (fig. 4-right) that combines a classical area, where users navigate the file system as usual, with an adaptive area containing a set of shortcuts computed with BIG.

BIGnav and BIGFile create a novel form of human-computer partnership in which the computer challenges the user in order to extract more information from each input, making interaction more efficient. We showed that both techniques are significantly faster (by 40% or more) than conventional navigation techniques. Wanyu Liu, supervised by Michel Beaudouin-Lafon, successfully defended her Ph.D. thesis on this topic, "Information theory as a unified tool for understanding and designing human-computer interaction" [35].
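The BIG principle can be illustrated with a small sketch. It assumes a discrete set of candidate targets, a belief (prior) over them, and a known likelihood model of which command the user issues for a given target and view; the one-dimensional toy world, the `toy_likelihood` model, its error rate `EPS` and all function names are hypothetical and are not taken from BIGnav or BIGFile. The system picks the view that maximizes the mutual information I(T; X) = H(X) - H(X | T) between the target T and the next command X, then updates its belief with Bayes' rule once the command arrives.

```python
import math

def entropy(probs):
    """Shannon entropy (in bits) of an iterable of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def command_distribution(prior, view, likelihood, commands):
    """P(x | view) = sum over targets t of P(t) * P(x | t, view)."""
    return {x: sum(prior[t] * likelihood(x, t, view) for t in prior) for x in commands}

def expected_information_gain(prior, view, likelihood, commands):
    """Mutual information I(T; X) = H(X) - H(X | T) for a candidate view."""
    h_x = entropy(command_distribution(prior, view, likelihood, commands).values())
    h_x_given_t = sum(
        prior[t] * entropy(likelihood(x, t, view) for x in commands) for t in prior
    )
    return h_x - h_x_given_t

def best_view(prior, views, likelihood, commands):
    """Choose the view whose next command is expected to be most informative."""
    return max(views, key=lambda v: expected_information_gain(prior, v, likelihood, commands))

def update(prior, command, view, likelihood):
    """Bayesian update of the belief over targets after observing a command."""
    unnorm = {t: prior[t] * likelihood(command, t, view) for t in prior}
    z = sum(unnorm.values())
    return {t: w / z for t, w in unnorm.items()}

# Hypothetical 1D toy world: 8 targets, the view is a cursor position, and the
# user answers "left", "here" or "right", making an error with probability EPS.
TARGETS = range(8)
COMMANDS = ("left", "here", "right")
EPS = 0.1

def toy_likelihood(command, target, view):
    intended = "left" if target < view else "right" if target > view else "here"
    return 1 - EPS if command == intended else EPS / 2

prior = {t: 1 / len(TARGETS) for t in TARGETS}
view = best_view(prior, TARGETS, toy_likelihood, COMMANDS)      # a central position
posterior = update(prior, "right", view, toy_likelihood)        # mass shifts right
```

In this toy model H(X | T) is the same for every view, so maximizing mutual information reduces to maximizing H(X), i.e., choosing the view for which the possible commands are as close to equally probable as possible, which matches the behavior described for BIGnav.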

In the area of visualization, we studied a common challenge faced by domain experts: identifying and comparing patterns in time series data. Although automatic measures exist to compute time series similarity, human intervention is often required to visually inspect the automatically generated results. In collaboration with the ILDA Inria team and Univ. Paris-Descartes, we studied how different visualization techniques affect similarity perception in EEG signals [12], [31]. Our goal was to understand whether the time series returned by automatic similarity measures are perceived as similar irrespective of the visualization technique, and whether what people perceive as similar with each visualization aligns with different automatic measures and their similarity constraints. Overall, our work indicates that the choice of visualization affects which temporal patterns people consider similar, i.e., the notion of similarity in time series is not visualization-independent. This demonstrates the need for effective human-computer partnerships in which the computer complements, rather than replaces, human skills and expertise.
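Different automatic measures can disagree about which series is "most similar", which is part of why their results need human inspection. As a minimal sketch, the code below compares two widely used measures, lock-step Euclidean distance and dynamic time warping (DTW) with absolute-difference cost; it does not claim these are the exact measures or parameters used in the EEG study, and the short series are hypothetical toy data rather than EEG signals.

```python
import math

def euclidean(a, b):
    """Lock-step distance: compares points at identical time indices."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def dtw(a, b):
    """Dynamic time warping distance with |x - y| as the local cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three allowed warping steps.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]

# Hypothetical toy series: a peak, the same peak shifted in time, and a flattened peak.
query = [0, 0, 1, 3, 1, 0, 0, 0]
shifted = [0, 0, 0, 1, 3, 1, 0, 0]
flat = [0, 0, 1, 1, 1, 0, 0, 0]
candidates = {"shifted": shifted, "flat": flat}

def nearest(measure):
    """Name of the candidate closest to the query under a given measure."""
    return min(candidates, key=lambda name: measure(query, candidates[name]))

print(nearest(euclidean), nearest(dtw))  # the two measures disagree
```

Euclidean distance penalizes the one-sample time shift and prefers the flattened peak, whereas DTW absorbs the shift and prefers the shifted peak; a person looking at the same series may side with either, depending on how they are visualized.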

We began to explore human-centered machine learning, which uses active machine learning to facilitate the personalization of an interactive system. We developed a gesture recognition system in which the user iteratively provides examples and also answers the system's queries. Our results demonstrated co-adaptation between the human user and the system, a phenomenon that challenges the state of the art in conventional active learning. We further explored interactive reinforcement learning as a way to efficiently explore high-dimensional parameter spaces [24].
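The query step of such a system can be sketched with uncertainty sampling, one common active learning strategy; the report does not state which query strategy or classifier the gesture recognizer uses. This minimal sketch assumes a nearest-centroid classifier over hypothetical two-dimensional gesture features, and the gesture labels and function names are illustrative only. The system asks the user to label the unlabeled example whose two closest class centroids are most nearly tied.

```python
import math

def centroids(labeled):
    """Mean feature vector per class, from (features, label) pairs."""
    sums, counts = {}, {}
    for x, y in labeled:
        s = sums.setdefault(y, [0.0] * len(x))
        for k, v in enumerate(x):
            s[k] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: tuple(v / counts[y] for v in s) for y, s in sums.items()}

def predict(x, cents):
    """Label of the closest class centroid."""
    return min(cents, key=lambda y: math.dist(x, cents[y]))

def margin(x, cents):
    """Gap between the two closest centroids; a small gap means an uncertain example."""
    d = sorted(math.dist(x, c) for c in cents.values())
    return d[1] - d[0]

def next_query(unlabeled, labeled):
    """Uncertainty sampling: ask the user about the most ambiguous example."""
    cents = centroids(labeled)
    return min(unlabeled, key=lambda x: margin(x, cents))

# Hypothetical gesture features, one user-provided example per class.
labeled = [((0, 0), "circle"), ((10, 0), "swipe")]
unlabeled = [(1, 0), (5, 1), (9, 0)]
query = next_query(unlabeled, labeled)  # the example halfway between the classes
# The user would now label `query` and may also add new examples of their own.
```

In conventional active learning the system alone drives this loop; when the user also chooses which examples to provide, as in the system described above, both the model and the user's demonstrations change over time.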