Section: New Results

Creativity

Participants: Sarah Fdili Alaoui [correspondent], Marianela Ciolfi Felice, Carla Griggio, Shu Yuan Hsueh, Germán Leiva, John Maccallum, Wendy Mackay, Baptiste Caramiaux, Nolwenn Maudet, Joanna Mcgrenere, Midas Nouwens, Jean-Philippe Rivière, Nicolas Taffin, Philip Tchernavskij, Theophanis Tsandilas, Andrew Webb, Michael Wessely.

ExSitu is interested in understanding the work practices of creative professionals, particularly artists, designers, and scientists, who push the limits of interactive technology. We follow a multi-disciplinary participatory design approach, working with both expert and non-expert users in diverse creative contexts. We also create situations that cause users to reflect deeply on their activities in situ and collaborate to articulate new design problems.

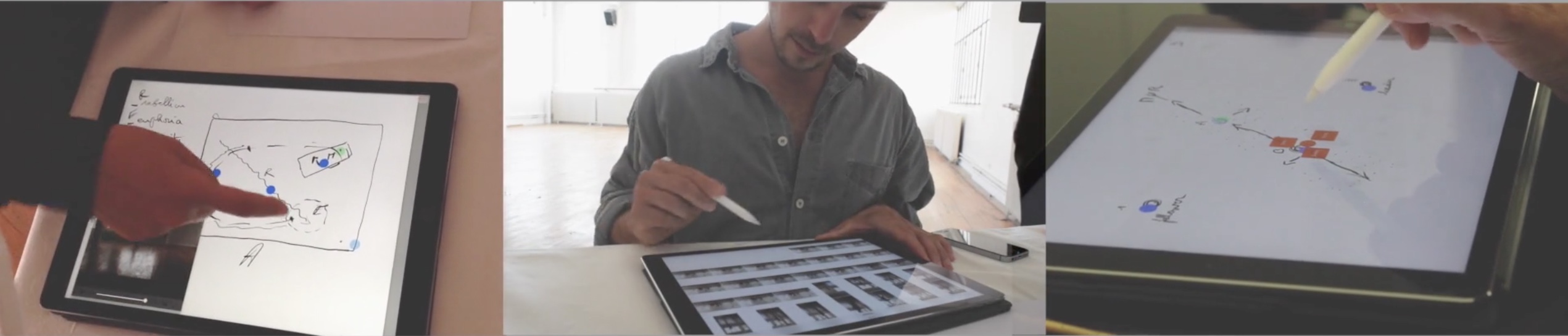

We identified diverse strategies for recording choreographic fragments and, influenced by the concept of information substrates, designed Knotation [19], a mobile pen-based tool with which choreographers sketch representations of their choreographic ideas and make them interactive (Figure 5). Subsequent studies showed that Knotation supports both dance-then-record and record-then-dance strategies. Marianela Ciolfi Felice, supervised by Wendy Mackay and Sarah Fdili Alaoui, successfully defended her Ph.D. thesis, "Supporting Expert Creative Practice", on this topic [32].


We are also developing a Choreographer’s Workbench, a full-body interactive system that helps choreographers explore dance movements by linking previously recorded movement ideas and revealing their underlying relationships. The system emphasizes discoverability and appropriation of movement ideas, using feedforward to visualize movement characteristics. We studied how dancers learn complex expressive movements [23] and how variability during practice affects the learning of motor and timing skills [11]. We contributed to soma-based design, i.e., movement-based designs and design practices that specifically engage with aesthetics [13]. We also collaborated with Ircam on a tool that uses reinforcement learning to explore high-dimensional sound spaces [24]. Users enter likes and dislikes to guide navigation within the sound space, shifting from a parameter-based to a reward-based exploration strategy.

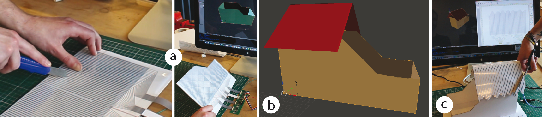

We are also interested in how makers transition between physical and digital designs. Makers often create both physical and digital prototypes to explore a design, taking advantage of the subtle feel of physical materials and the precision and power of digital models. We developed ShapeMe [25], a novel smart material that captures its own geometry as it is physically cut by an artist or designer. ShapeMe includes a software toolkit that lets users generate customized, embeddable sensors that accommodate various object shapes. As the designer works on a physical prototype, the toolkit streams the physical changes to the prototype's digital counterpart in a 3D CAD environment (Figure 6). We used a rapid, inexpensive and simple-to-manufacture inkjet printing technique to create the embedded sensors. We created a linear predictive model of the sensors’ lengths, and our empirical tests of ShapeMe showed an average accuracy of 2 to 3 mm. We further presented an application scenario for modeling multi-object constructions, such as architectural models, and 3D models consisting of multiple layers stacked on top of one another.
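As a minimal illustration of what a linear predictive model of sensor length can look like, the sketch below fits a straight line by ordinary least squares and uses it to estimate a length from a reading. It is not the model from [25]: the choice of a single scalar reading per sensor, the function names `fit_line` and `predict_length`, and the calibration values are all assumptions made for this example, and the numbers are synthetic rather than measured.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need at least two distinct x values")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def predict_length(reading, slope, intercept):
    """Estimate the remaining sensor length (mm) from one reading."""
    return slope * reading + intercept


# Synthetic calibration pairs, invented for illustration only:
# sensor reading (arbitrary units) -> known sensor length (mm).
readings = [10.0, 20.0, 30.0, 40.0]
lengths_mm = [25.0, 45.0, 65.0, 85.0]

slope, intercept = fit_line(readings, lengths_mm)
estimated_mm = predict_length(25.0, slope, intercept)
```

In practice such a model would be calibrated on sensors cut to known lengths, and its residuals on held-out cuts would give the kind of millimetre-level accuracy figure reported above.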


We also presented Interactive Tangrami [29], a method for prototyping interactive physical interfaces from functional paper-folded building blocks (Tangramis). Tangramis can include various sensor input and visual output capabilities. Our digital design tool lets makers design the shape and interactive behavior of custom user interfaces. The software manages communication with the paper-folded blocks and streams the interaction data via the Open Sound Control (OSC) protocol to an application prototyping environment, such as Max/MSP. The building blocks are fabricated digitally with a rapid and inexpensive inkjet printing method. Our system lets makers prototype physical user interfaces within minutes, without knowledge of the underlying technologies. Finally, we continued our work with Saarland University, TU Berlin and MIT on digitally fabricated directional screens [15]. Michael Wessely, supervised by Theophanis Tsandilas and Wendy Mackay, successfully defended his Ph.D. thesis, "Fabricating Malleable Interaction-Aware Material" [36], on these topics.
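To make the OSC streaming step concrete, the following sketch encodes a single OSC 1.0 message using only the Python standard library and, optionally, sends it over UDP. It is an illustration, not the Interactive Tangrami software: the address `/tangrami/block1/touch`, the host, and the port are hypothetical, and the use of UDP as transport is an assumption (it is the common choice for OSC, but the source does not specify it).

```python
import socket
import struct


def osc_string(text):
    """Encode an OSC-string: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *args):
    """Build an OSC 1.0 message with int32, float32 and string arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("bool arguments are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            tags += "s"
            payload += osc_string(arg)
        else:
            raise TypeError(f"unsupported argument type: {type(arg).__name__}")
    return osc_string(address) + osc_string(tags) + payload


def send_osc(packet, host="127.0.0.1", port=9000):
    """Send one encoded message over UDP (host and port are placeholders)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))


# Hypothetical address for a touch reading on one paper-folded block.
packet = osc_message("/tangrami/block1/touch", 0.5)
```

Calling `send_osc(packet)` would deliver the message to any OSC listener on the chosen port, which is how a prototyping environment could receive the interaction data.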