Section: New Results

Computer-Assisted Design with Heterogeneous Representations

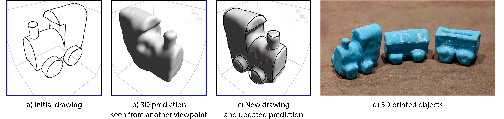

3D Sketching using Multi-View Deep Volumetric Prediction

Participants : Johanna Delanoy, Adrien Bousseau.

Drawing is the most direct way for people to express their visual thoughts. However, while humans are extremely good at perceiving 3D objects from line drawings, this task remains very challenging for computers as many 3D shapes can yield the same drawing. Existing sketch-based 3D modeling systems rely on heuristics to reconstruct simple shapes, require extensive user interaction, or exploit specific drawing techniques and shape priors. Our goal is to lift these restrictions and offer a minimal interface to quickly model general 3D shapes with contour drawings. While our approach can produce approximate 3D shapes from a single drawing, it achieves its full potential once integrated into an interactive modeling system, which allows users to visualize the shape and refine it by drawing from several viewpoints (Figure 4). At the core of our approach is a deep convolutional neural network (CNN) that processes a line drawing to predict occupancy in a voxel grid. The use of deep learning results in a flexible and robust 3D reconstruction engine that allows us to treat sketchy bitmap drawings without requiring complex, hand-crafted optimizations. While similar architectures have been proposed in the computer vision community, our originality is to extend this architecture to a multiview context by training an updater network that iteratively refines the prediction as novel drawings are provided.
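The control flow of such a multi-view refinement can be sketched as follows. This is only an illustration under stated assumptions: `reconstruct`, `toy_single_view_net`, `toy_updater_net`, the number of passes and the 0.5 threshold are all hypothetical, the two "networks" are trivial stand-ins rather than trained CNNs, and the alignment of the voxel grid with each drawing's viewpoint is omitted.

```python
import numpy as np

def reconstruct(drawings, single_view_net, updater_net, n_passes=2):
    """Predict a boolean voxel grid from one or more drawings.

    single_view_net(drawing) -> occupancy probabilities (D, H, W)
    updater_net(drawing, occupancy) -> refined occupancy probabilities
    Both callables are hypothetical placeholders for trained networks.
    """
    # Initial prediction from the first drawing alone.
    occupancy = single_view_net(drawings[0])
    # Iteratively refine the prediction with every available drawing.
    for _ in range(n_passes):
        for drawing in drawings:
            occupancy = updater_net(drawing, occupancy)
    # Threshold probabilities into a binary shape (0.5 is an assumption).
    return occupancy > 0.5

def toy_single_view_net(drawing):
    # Stand-in: extrude inked pixels along depth with probability 0.6.
    ink = (drawing > 0).astype(float)
    return np.repeat(ink[None, :, :], drawing.shape[0], axis=0) * 0.6

def toy_updater_net(drawing, occupancy):
    # Stand-in: blend the current prediction with the inked pixels.
    ink = (drawing > 0).astype(float)
    return 0.5 * occupancy + 0.5 * ink[None, :, :]
```

With real networks, only the two callables would change; the loop structure is what lets a new drawing be folded into an existing prediction instead of restarting the reconstruction.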

This work is a collaboration with Mathieu Aubry from Ecole des Ponts ParisTech and Alexei Efros and Philip Isola from UC Berkeley. The work was published in Proceedings of the ACM on Computer Graphics and Interactive Techniques and presented at the ACM SIGGRAPH I3D Symposium on Interactive Computer Graphics and Games [12].

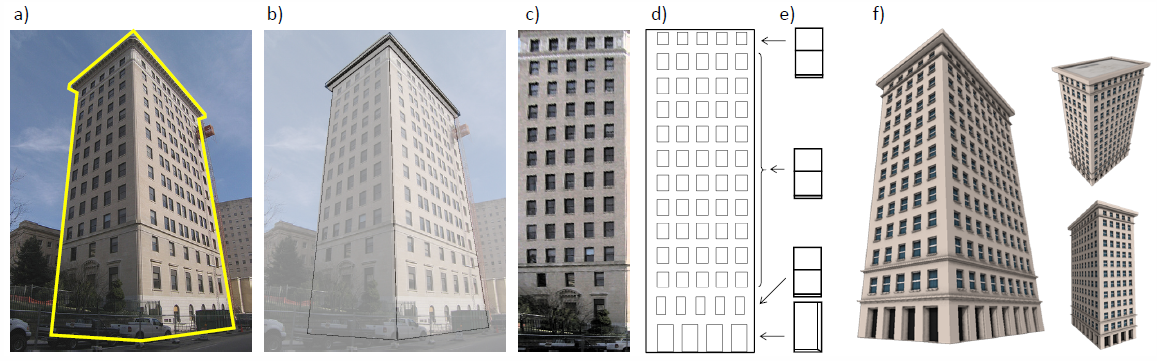

Procedural Modeling of a Building from a Single Image

Participant : Adrien Bousseau.

Virtual cities are in demand for computer games, movies, and urban planning, but creating numerous 3D building models takes a lot of time. Procedural modeling has become popular in recent years to overcome this issue, but creating a grammar to get a desired output is difficult and time-consuming even for expert users. In this work, we present an interactive tool that allows users to automatically generate such a grammar from a single image of a building. The user selects a photograph and highlights the silhouette of the target building as input to our method. Our pipeline automatically generates the building components, from large-scale building mass to fine-scale window and door geometry. Each stage of our pipeline combines convolutional neural networks (CNNs) and optimization to select and parameterize procedural grammars that reproduce the building elements of the picture. In the first stage, our method jointly estimates camera parameters and building mass shape. Once known, the building mass enables the rectification of the facades, which are given as input to the second stage that recovers the facade layout. This layout allows us to extract individual windows and doors that are subsequently fed to the last stage of the pipeline, which selects procedural grammars for windows and doors. Finally, the grammars are combined to generate a complete procedural building as output. We devise a common methodology to make each stage of this pipeline tractable. This methodology consists in simplifying the input image to match the visual appearance of synthetic training data, and in using optimization to refine the parameters estimated by CNNs. We used our method to generate a variety of procedural models of buildings from existing photographs.
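The idea of refining a network's parameter estimate by optimization can be illustrated with a toy example. Everything here is an assumption made for illustration: an axis-aligned box `(x0, y0, x1, y1)` stands in for the parameters of a procedural grammar, intersection-over-union with the user's silhouette stands in for the objective, and a greedy one-pixel local search stands in for the optimizer. The names `render_box`, `iou` and `refine` are hypothetical.

```python
import numpy as np

def render_box(params, shape):
    """Rasterize a box given by its edges (x0, y0, x1, y1) as a boolean mask."""
    x0, y0, x1, y1 = (max(int(v), 0) for v in params)
    mask = np.zeros(shape, dtype=bool)
    mask[y0:y1, x0:x1] = True
    return mask

def iou(a, b):
    """Intersection over union of two boolean masks."""
    union = np.logical_or(a, b).sum()
    return np.logical_and(a, b).sum() / union if union else 0.0

def refine(initial, target, n_rounds=50):
    """Greedy local search starting from an initial (e.g. network) estimate."""
    best = tuple(initial)
    best_score = iou(render_box(best, target.shape), target)
    for _ in range(n_rounds):
        improved = False
        for i in range(4):               # each box edge in turn
            for step in (-1, 1):         # move it by one pixel
                cand = list(best)
                cand[i] += step
                if cand[2] <= cand[0] or cand[3] <= cand[1]:
                    continue             # skip degenerate boxes
                score = iou(render_box(cand, target.shape), target)
                if score > best_score:
                    best, best_score, improved = tuple(cand), score, True
        if not improved:
            break                        # local optimum reached
    return best, best_score
```

A usage example, where the initial guess plays the role of a coarse network prediction:

```python
silhouette = render_box((5, 4, 15, 12), (32, 32))
params, score = refine((7, 6, 15, 15), silhouette)
```

The design point is that the initial estimate only needs to be close enough for a local search to succeed, which is why a coarse prediction followed by refinement can be cheaper than optimizing from scratch.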

The work was published in Computer Graphics Forum and presented at Eurographics 2018 [15].

OpenSketch: A Richly-Annotated Dataset of Product Design Sketches

Participants : Yulia Gryaditskaya, Frédéric Durand, Adrien Bousseau.

We collected a dataset of more than 400 product design sketches, representing 12 man-made objects drawn from two different viewpoints by 7 to 15 product designers of varying expertise. Together with industrial design teachers, we distilled a taxonomy of the methods designers use to accurately sketch in perspective and used it to label each stroke of the 214 sketches drawn from one of the two viewpoints. We registered each sketch to its reference 3D model by annotating sparse correspondences. Our analysis of the annotated sketches reveals systematic drawing strategies over time and shapes. We also developed several applications of our dataset for sketch-based modeling and sketch filtering. We will distribute our dataset under the Creative Commons CC0 license to foster research in digital sketching.

This work is a collaboration with Mark Sypesteyn, Jan Willem Hoftijzer and Sylvia Pont from TU Delft, Netherlands. It is currently under review.

Line Drawing Vectorization using a Global Parameterization

Participants : Tibor Stanko, Adrien Bousseau.

Despite the progress made in recent years, automatic vectorization of line drawings remains a difficult task. For drawings containing noise, holes and oversketched strokes, the main challenges are correctly classifying curve junctions, filling in missing information, and clustering multiple strokes that correspond to a single curve. We propose a new line drawing vectorization method, which addresses the above challenges in a global manner. Inspired by the quad meshing literature, we compute a global parameterization of the input drawing, such that nearby strokes are mapped to a single straight line in the parametric domain, while junctions are mapped to straight line intersections. The vectorization is obtained by following the straight lines in the parametric domain, and mapping them back to the original space. This allows us to process both clean and sketchy drawings.

This work is an ongoing collaboration with David Bommes from University of Bern, Mikhail Bessmeltsev from University of Montreal, and Justin Solomon from MIT.

Image-Space Motion Rigidification for Video Stylization

Participants : Johanna Delanoy, Adrien Bousseau.

Existing video stylization methods often retain the 3D motion of the original video, making the result look like a 3D scene covered in paint rather than the 2D painting of a scene. In contrast, traditional hand-drawn animations often exhibit simplified in-plane motion, such as in the case of cut-out animations where the animator moves pieces of paper from frame to frame. Inspired by this technique, we propose to modify a video such that its content undergoes 2D rigid transforms. To achieve this goal, our approach applies motion segmentation and optimization to best approximate the input optical flow with piecewise-rigid transforms, and re-renders the video such that its content follows the simplified motion. The output of our method is a new video and its optical flow, which can be fed to any existing video stylization algorithm.
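As a rough illustration of approximating optical flow with a rigid transform, the sketch below fits a single 2D rotation and translation to the flow vectors of one segment in the least-squares sense, using the standard SVD-based (Kabsch/Procrustes) solution. It is not the optimization described above: the function names `fit_rigid_2d` and `rigid_flow` are hypothetical, the motion segmentation is assumed to be given, and a piecewise-rigid approximation would repeat this fit for each segment.

```python
import numpy as np

def fit_rigid_2d(points, flow):
    """Least-squares rigid transform (R, t) mapping points to points + flow.

    points, flow: arrays of shape (N, 2) for the pixels of one segment.
    """
    src = np.asarray(points, dtype=float)
    dst = src + np.asarray(flow, dtype=float)
    src_mean = src.mean(axis=0)
    dst_mean = dst.mean(axis=0)
    # 2x2 cross-covariance between centered source and target positions.
    H = (src - src_mean).T @ (dst - dst_mean)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, d]) @ U.T
    t = dst_mean - R @ src_mean
    return R, t

def rigid_flow(points, R, t):
    """Flow field induced by the rigid transform at the given points."""
    src = np.asarray(points, dtype=float)
    return src @ R.T + t - src
```

Replacing the measured flow of a segment by `rigid_flow(points, R, t)` gives a simplified, in-plane motion for that segment; the residual between the two indicates how far the original motion was from rigid.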

This work is a collaboration with Aaron Hertzmann from Adobe Research. It is currently under review.

Computational Design of Tensile Structures

Participants : David Jourdan, Adrien Bousseau.

Tensile structures are architectural shapes made of stretched elastic material that can be used to create large-span roofs. Their elastic properties make it quite challenging to obtain a specific shape, and the final shape of a tensile structure is usually found rather than imposed. We created a design tool for tensile structures that, unlike existing software, lets the user specify the shape they want and finds the closest fit.

This work is an ongoing collaboration with Melina Skouras from IMAGINE (Inria Rhone Alpes). A preliminary version was presented at JFIG (Journées Françaises d'Informatique Graphique) 2018.