Section: New Results

Motion saliency in videos

Participants: Léo Maczyta, Patrick Bouthemy.

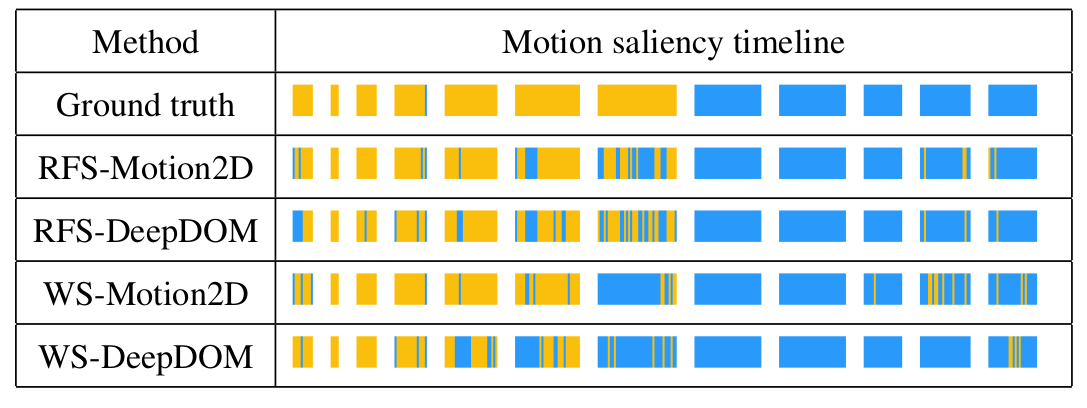

This work addresses motion saliency in videos. More specifically, we aim to extract the temporal segments of a video in which motion saliency is present, which is a prerequisite for computing motion saliency maps in the relevant images. This amounts to a frame classification problem: a frame is classified as dynamically salient if it contains local motion departing from its context. Temporal motion saliency detection is relevant for applications where one needs to trigger alerts or to monitor dynamic behaviors from videos. The proposed approach handles situations with a mobile camera and involves a deep learning classification framework applied after camera motion compensation. We have designed and compared two methods, based respectively on image warping and on residual flow. We also defined a baseline that relies on a two-stream network to process temporal and spatial information but does not use camera motion compensation. Experiments on real videos demonstrate that we can obtain accurate classification in highly challenging situations, with a significant improvement over the baseline. In particular, we showed that compensating the camera motion yields better classification. We also showed that, given the limited training data available, providing the residual flow as input to the classification network produces better results than providing the warped images.
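To make the notion of residual flow concrete, the following is a minimal sketch, not the method used in this work. It assumes that the camera motion can be approximated by a 6-parameter affine model fitted to a dense optical flow field by ordinary least squares. The model choice, the estimator, and the function names `fit_affine_flow` and `residual_flow` are all illustrative assumptions. The residual flow is what remains once the fitted camera motion is subtracted.

```python
import numpy as np


def fit_affine_flow(flow):
    """Fit an affine motion model to a dense flow field of shape (H, W, 2).

    Assumption: the dominant (camera) motion is affine in pixel coordinates.
    Returns the flow predicted by the fitted model, same shape as the input.
    """
    h, w = flow.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    # One design-matrix row [1, x, y] per pixel, in row-major order.
    design = np.stack(
        [np.ones(h * w), xs.ravel(), ys.ravel()], axis=1
    ).astype(float)
    # Solve for both flow components at once; params has shape (3, 2).
    params, *_ = np.linalg.lstsq(design, flow.reshape(-1, 2), rcond=None)
    return (design @ params).reshape(h, w, 2)


def residual_flow(flow):
    """Subtract the fitted camera motion from the observed flow."""
    return flow - fit_affine_flow(flow)
```

A plain least-squares fit is biased by independently moving objects, so a robust estimator would usually be preferable in practice. In the approach described above, the residual flow is fed to a classification network; that network is not reproduced in this sketch.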

Collaborators: Olivier Le Meur (Percept team, Irisa).
