Section: New Results

Computer-Assisted Design with Heterogeneous Representations

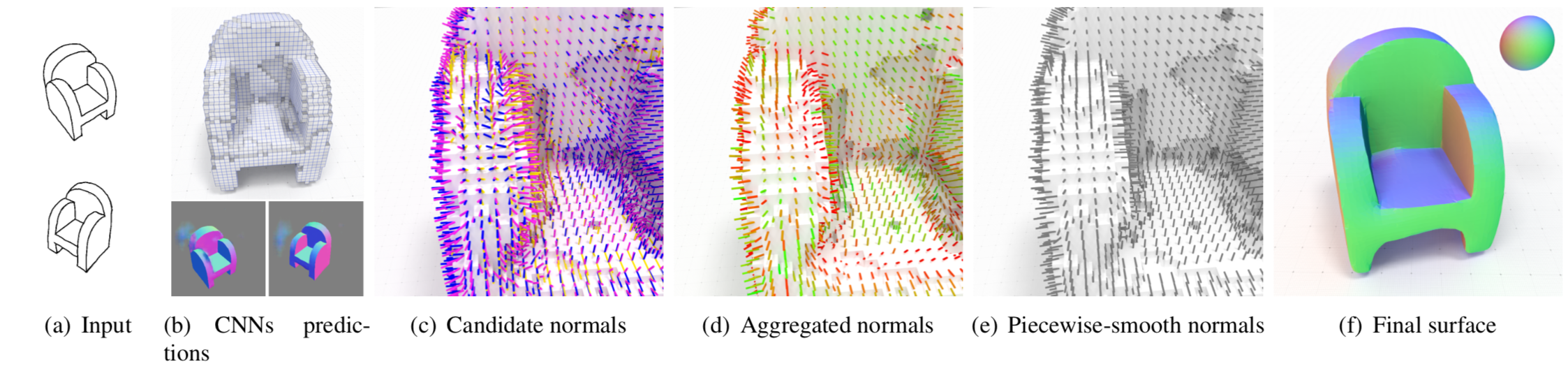

Combining Voxel and Normal Predictions for Multi-View 3D Sketching

Participants : Johanna Delanoy, Adrien Bousseau.

Recent works on data-driven sketch-based modeling use either voxel grids or normal/depth maps as geometric representations compatible with convolutional neural networks. While voxel grids can represent complete objects – including parts not visible in the sketches – their memory consumption restricts them to low-resolution predictions. In contrast, a single normal or depth map can capture fine details, but multiple maps from different viewpoints need to be predicted and fused to produce a closed surface. We propose to combine these two representations to address their respective shortcomings in the context of a multi-view sketch-based modeling system. Our method predicts a voxel grid common to all the input sketches, along with one normal map per sketch. We then use the voxel grid as a support for normal map fusion by optimizing its extracted surface such that it is consistent with the re-projected normals, while being as piecewise-smooth as possible overall (Fig. 4). We compare our method with a recent voxel prediction system, demonstrating improved recovery of sharp features over a variety of man-made objects.

This work is a collaboration with David Coeurjolly from Université de Lyon and Jacques-Olivier Lachaud from Université Savoie Mont Blanc. The work was published in the journal Computers & Graphics and presented at the SMI conference [14].

Video Motion Stylization by 2D Rigidification

Participants : Johanna Delanoy, Adrien Bousseau.

We introduce a video stylization method that increases the apparent rigidity of motion. Existing stylization methods often retain the 3D motion of the original video, making the result look like a 3D scene covered in paint rather than a 2D painting of a scene. In contrast, traditional hand-drawn animations often exhibit simplified in-plane motion, such as in the case of cut-out animations where the animator moves pieces of paper from frame to frame. Inspired by this technique, we propose to modify a video such that its content undergoes 2D rigid transforms (Fig. 5). To achieve this goal, our approach applies motion segmentation and optimization to best approximate the input optical flow with piecewise-rigid transforms, and re-renders the video such that its content follows the simplified motion. The output of our method is a new video and its optical flow, which can be fed to any existing video stylization algorithm.
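As an illustration of the approximation step, the following minimal sketch fits a single 2D rigid transform (rotation plus translation) to the optical flow of one motion segment in the least-squares sense. It is not the method's actual optimization: the function names `fit_rigid_2d` and `rigid_flow` are hypothetical, the segmentation is assumed to be given, and the flow is assumed to be sampled at a list of pixel positions.

```python
import math

def fit_rigid_2d(points, flows):
    """Least-squares 2D rigid transform (angle, translation) mapping each
    point p to p + flow(p). Assumes all points belong to one motion segment."""
    n = len(points)
    targets = [(x + u, y + v) for (x, y), (u, v) in zip(points, flows)]
    # Centroids of the source points and of their flow-displaced targets.
    pcx = sum(x for x, _ in points) / n
    pcy = sum(y for _, y in points) / n
    qcx = sum(x for x, _ in targets) / n
    qcy = sum(y for _, y in targets) / n
    # Accumulate dot and cross products of the centered point pairs.
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(points, targets):
        ax, ay = px - pcx, py - pcy
        bx, by = qx - qcx, qy - qcy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)  # optimal rotation angle
    c, s = math.cos(theta), math.sin(theta)
    # The translation aligns the rotated source centroid with the target centroid.
    tx = qcx - (c * pcx - s * pcy)
    ty = qcy - (s * pcx + c * pcy)
    return theta, (tx, ty)

def rigid_flow(points, theta, t):
    """Flow field induced by a rigid transform: (R p + t) - p for each point."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0] - x, s * x + c * y + t[1] - y)
            for x, y in points]
```

Under these assumptions, replacing the input flow of each segment with `rigid_flow(points, theta, t)` yields a piecewise-rigid motion field of the kind described above.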

This work is a collaboration with Aaron Hertzmann from Adobe Research. It was presented at the ACM/EG Expressive Symposium [21].

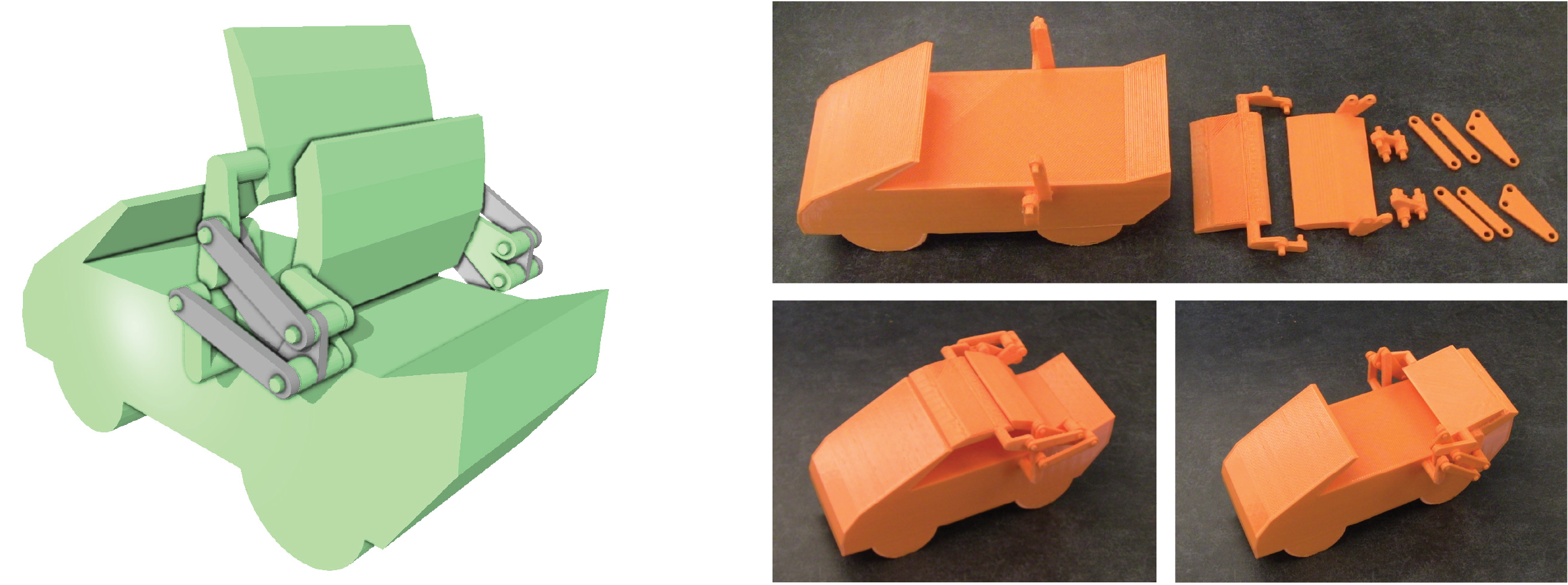

Multi-Pose Interactive Linkage Design

Participant : Adrien Bousseau.

We introduce an interactive tool for novice users to design mechanical objects made of 2.5D linkages. Users simply draw the shape of the object and a few key poses of its multiple moving parts. Our approach automatically generates a one-degree-of-freedom linkage that connects the fixed and moving parts, such that the moving parts traverse all input poses in order without any collision with the fixed and other moving parts. In addition, our approach avoids common linkage defects and favors compact linkages and smooth motion trajectories. Finally, our system automatically generates the 3D geometry of the object and its links, allowing the rapid creation of a physical mockup of the designed object (Fig. 6).

This work was conducted in collaboration with Gen Nishida and Daniel G. Aliaga from Purdue University. It was published in Computer Graphics Forum and presented at the Eurographics conference [18].

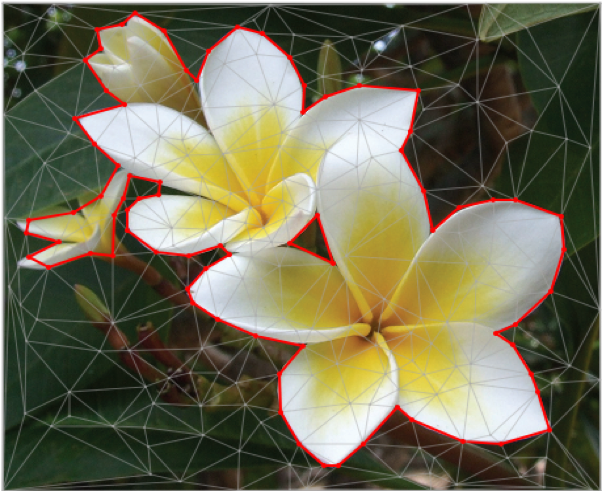

Extracting Geometric Structures in Images with Delaunay Point Processes

Participant : Adrien Bousseau.

We introduce Delaunay Point Processes, a framework for the extraction of geometric structures from images. Our approach simultaneously locates and groups geometric primitives (line segments, triangles) to form extended structures (line networks, polygons) for a variety of image analysis tasks. Similarly to traditional point processes, our approach uses Markov Chain Monte Carlo to minimize an energy that balances fidelity to the input image data with geometric priors on the output structures. However, while existing point processes struggle to model structures composed of inter-connected components, we propose to embed the point process into a Delaunay triangulation, which provides high-quality connectivity by construction. We further leverage key properties of the Delaunay triangulation to devise a fast Markov Chain Monte Carlo sampler. We demonstrate the flexibility of our approach on a variety of applications, including line network extraction, object contouring, and mesh-based image compression (see Fig. 7).
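To make the energy-minimization idea concrete, here is a deliberately simplified sketch of a Metropolis-style sampler with a decreasing temperature. It is not the Delaunay Point Process sampler: the number of points is fixed (no birth or death moves), no triangulation is built, and the toy `energy` function (a data term pulling points towards a hypothetical horizontal image edge, plus a prior penalizing points that are too close) is an assumption made only for illustration.

```python
import math
import random

def energy(points, edge_y=0.5, min_dist=0.1, weight=1.0):
    """Toy energy: the data term pulls points towards a horizontal 'image
    edge' at y = edge_y; the prior penalizes pairs closer than min_dist."""
    data = sum((y - edge_y) ** 2 for _, y in points)
    prior = 0.0
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            d = math.dist(points[i], points[j])
            if d < min_dist:
                prior += min_dist - d
    return data + weight * prior

def anneal(points, steps=5000, t_start=1.0, t_end=1e-3, sigma=0.05, seed=0):
    """Metropolis sampling with a geometric cooling schedule.
    Returns the lowest-energy configuration visited and its energy."""
    rng = random.Random(seed)
    current = list(points)
    e_cur = energy(current)
    best, e_best = list(current), e_cur
    for k in range(steps):
        temp = t_start * (t_end / t_start) ** (k / max(1, steps - 1))
        # Symmetric proposal: perturb one randomly chosen point.
        i = rng.randrange(len(current))
        x, y = current[i]
        proposal = list(current)
        proposal[i] = (x + rng.gauss(0, sigma), y + rng.gauss(0, sigma))
        e_new = energy(proposal)
        # Always accept improvements; accept degradations with
        # probability exp(-(e_new - e_cur) / temp).
        if e_new <= e_cur or rng.random() < math.exp((e_cur - e_new) / temp):
            current, e_cur = proposal, e_new
            if e_cur < e_best:
                best, e_best = list(current), e_cur
    return best, e_best
```

In this toy setting the sampler spreads the points along the edge; the framework described above additionally changes the number of points and derives connectivity from the triangulation.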

This work was conducted in collaboration with Jean-Dominique Favreau and Florent Lafarge (TITANE group), and published in IEEE PAMI [16].

Integer-Grid Sketch Vectorization

Participants : Tibor Stanko, Adrien Bousseau.

A major challenge in line drawing vectorization is segmenting the input bitmap into separate curves. This segmentation is especially problematic for rough sketches, where curves are depicted using multiple overdrawn strokes. Inspired by feature-aligned mesh quadrangulation methods in geometry processing, we propose to extract vector curve networks by parametrizing the image with local drawing-aligned integer grids. The regular structure of the grid facilitates the extraction of clean line junctions; due to the grid's discrete nature, nearby strokes are implicitly grouped together. Our method successfully vectorizes both clean and rough line drawings, whereas previous methods focused on only one of those drawing types.

This work is an ongoing collaboration with David Bommes from University of Bern and Mikhail Bessmeltsev from University of Montreal. It is currently under review.

Surfacing Sparse Unorganized 3D Curves using Global Parametrization

Participants : Tibor Stanko, Adrien Bousseau.

Designers use sketching to quickly externalize ideas, often using a handful of curves to express complex shapes. Recent years have brought a plethora of new tools for creating designs directly in 3D. The output of these tools is often a set of sparse, unorganized curves. We propose a novel method for automatic conversion of such unorganized curves into clean curve networks ready for surfacing. The core of our method is a global curve-aligned parametrization, which allows us to automatically aggregate information from neighboring curves and produce an output with valid topology.

This work is an ongoing collaboration with David Bommes from University of Bern, Mikhail Bessmeltsev from University of Montreal, and Justin Solomon from MIT.

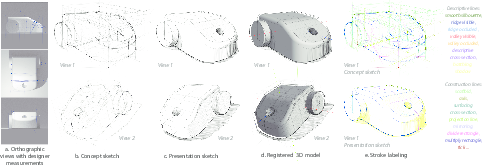

OpenSketch: A Richly-Annotated Dataset of Product Design Sketches

Participants : Yulia Gryaditskaya, Adrien Bousseau, Fredo Durand.

Product designers extensively use sketches to create and communicate 3D shapes and thus form an ideal audience for sketch-based modeling, non-photorealistic rendering and sketch filtering. However, sketching requires significant expertise and time, making design sketches a scarce resource for the research community. We introduce OpenSketch, a dataset of product design sketches aimed at offering a rich source of information for a variety of computer-aided design tasks. OpenSketch contains more than 400 sketches representing 12 man-made objects drawn by 7 to 15 product designers of varying expertise. We provided participants with front, side and top views of these objects (Fig. 8a), and instructed them to draw from two novel perspective viewpoints (Fig. 8b). This drawing task forces designers to construct the shape from their mental vision rather than directly copy what they see. They achieve this task by employing a variety of sketching techniques and methods not observed in prior datasets. Together with industrial design teachers, we distilled a taxonomy of line types and used it to label each stroke of the 214 sketches drawn from one of the two viewpoints (Fig. 8e). While some of these lines have long been known in computer graphics, others remain to be reproduced algorithmically or exploited for shape inference. In addition, we asked participants to produce clean presentation drawings from each of their sketches, resulting in aligned pairs of drawings of different styles (Fig. 8c). Finally, we registered each sketch to its reference 3D model by annotating sparse correspondences (Fig. 8d). We provide an analysis of our annotated sketches, which reveals systematic drawing strategies over time and shapes, as well as a positive correlation between presence of construction lines and accuracy. Our sketches, in combination with provided annotations, form challenging benchmarks for existing algorithms as well as a great source of inspiration for future developments.
We illustrate the versatility of our data by using it to test a 3D reconstruction deep network trained on synthetic drawings, as well as to train a filtering network to convert concept sketches into presentation drawings. We distribute our dataset under the Creative Commons CC0 license: https://ns.inria.fr/d3/OpenSketch.

This work is a collaboration with Mark Sypesteyn, Jan Willem Hoftijzer and Sylvia Pont from TU Delft, Netherlands. This work was published in ACM Transactions on Graphics, and presented at SIGGRAPH Asia 2019 [17].

Intersection vs. Occlusion: a Discrete Formulation of Line Drawing 3D Reconstruction

Participants : Yulia Gryaditskaya, Adrien Bousseau, Felix Hähnlein.

The popularity of sketches in design stems from their ability to communicate complex 3D shapes with a handful of lines. Yet, this economy of means also makes sketch interpretation a challenging task, as global 3D understanding needs to emerge from scattered pen strokes. To tackle this challenge, many prior methods cast 3D reconstruction of line drawings as a global optimization that seeks to satisfy a number of geometric criteria, including orthogonality, planarity, and symmetry. However, all of these methods require users to distinguish line intersections that exist in 3D from the ones that are only due to occlusions. These user annotations are critical to the success of existing algorithms, since mistakenly treating an occlusion as a true intersection would connect distant parts of the shape, with dramatic consequences on the overall optimization procedure. We propose a line drawing 3D reconstruction method that automatically discriminates 3D intersections from occlusions. This automation not only reduces user burden but also allows our method to scale to real-world sketches composed of hundreds of pen strokes, for which the number of intersections is too high to make existing user-assisted methods practical. Our key idea is to associate each 2D intersection with a binary variable that indicates if the intersection should be preserved in 3D. Our algorithm then searches for the assignment of binary values that yields the best 3D shape, as measured with criteria similar to those used by prior work for 3D reconstruction. However, the combinatorial nature of this binary assignment problem prevents trying all possible configurations. Our main technical contribution is an efficient search algorithm that leverages principles of how product designers draw to reconstruct complex 3D drawings within minutes.
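The binary formulation can be illustrated with a naive exhaustive search, which is precisely the baseline that does not scale: with n intersections there are 2^n assignments to evaluate. The sketch below is not the efficient search algorithm of this work. The `score` function is a hypothetical stand-in for the geometric criteria, and it assumes that a depth estimate is already known for each stroke, whereas in the actual problem the 3D positions are unknowns.

```python
from itertools import product

def count_components(n_strokes, kept_edges):
    """Number of connected groups of strokes, given the kept intersections."""
    parent = list(range(n_strokes))

    def find(a):
        while parent[a] != a:
            parent[a] = parent[parent[a]]  # path halving
            a = parent[a]
        return a

    for i, j in kept_edges:
        parent[find(i)] = find(j)
    return len({find(a) for a in range(n_strokes)})

def score(assignment, intersections, depths, penalty=1.0):
    """Hypothetical cost: keeping an intersection between strokes at different
    depths is costly, and so is leaving the drawing split into many parts."""
    kept = [e for e, keep in zip(intersections, assignment) if keep]
    gap = sum(abs(depths[i] - depths[j]) for i, j in kept)
    return gap + penalty * (count_components(len(depths), kept) - 1)

def best_assignment(intersections, depths):
    """Exhaustive search over all 2**n binary assignments (1 = true 3D
    intersection, 0 = occlusion). Only feasible for very small n."""
    return min(product((0, 1), repeat=len(intersections)),
               key=lambda a: score(a, intersections, depths))
```

For example, with `depths = [0.0, 0.1, 0.2, 5.0]` and `intersections = [(0, 1), (1, 2), (0, 3), (0, 2)]`, the search labels the intersection with the distant fourth stroke as an occlusion. A sketch with a few hundred intersections would make this enumeration intractable, which motivates a dedicated search strategy.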

This work is a collaboration with Alla Sheffer (Professor at University of British Columbia) and Chenxi Liu (PhD student at University of British Columbia).

Data-Driven Sketch Segmentation

Participants : Yulia Gryaditskaya, Felix Hähnlein, Adrien Bousseau.

Deep learning achieves impressive performance on image segmentation, which has motivated the recent development of deep neural networks for the related task of sketch segmentation, where the goal is to assign labels to the different strokes that compose a line drawing. However, while natural images are well represented as bitmaps, line drawings can also be represented as vector graphics, such as point sequences and point clouds. In addition to offering different trade-offs on resolution and storage, vector representations often come with additional information, such as stroke ordering and speed.

In this project, we evaluate three crucial design choices for sketch segmentation using deep learning: which sketch representation to use, which information to encode in this representation, and which loss function to optimize. Our findings suggest that point clouds represent a competitive alternative to bitmaps for sketch segmentation, and that providing extra-geometric information improves performance.

Stroke-Based Concept Sketch Generation

Participants : Felix Hähnlein, Yulia Gryaditskaya, Adrien Bousseau.

State-of-the-art non-photorealistic rendering algorithms can generate lines representing salient visual features on objects. However, very few methods exist for generating lines outside of an object, as is the case for most construction lines used in technical drawings and design sketches. Furthermore, most methods do not generate human-like strokes and do not consider the drawing order of a sketch.

In this project, we address these issues by proposing a reinforcement learning framework, where a virtual agent tries to generate a construction sketch of a given 3D model. One key element of our approach is the study and the mathematical formalization of drawing strategies used by industrial designers.

Designing Programmable, Self-Actuated Structures

Participants : David Jourdan, Adrien Bousseau.

Self-actuated structures are material assemblies that deform on their own from an initially simple state into a more complex, curved shape. Most relevant to applications in manufacturing are self-actuated shapes that are fabricated flat, considerably reducing the cost and complexity of manufacturing curved 3D surfaces. While there are many ways to design self-actuated materials (e.g. using heat or water as actuation mechanisms), we use 3D printing to embed rigid patterns into prestressed fabric, which is then released and assumes a shape matching a given target when reaching static equilibrium.

While using a 3D printer to embed plastic curves into prestressed fabric has been explored before, the technique has mostly been restricted to piecewise minimal surfaces, making it impossible to reproduce most shapes. By using a dense packing of 3-pointed stars, we are able to create convex shapes and positive Gaussian curvature. Moreover, we found a direct link between the stars' dimensions and the induced curvature, allowing us to build an inverse design tool that can faithfully reproduce some target shapes.

This is a collaboration with Mélina Skouras of Inria Rhône Alpes and Etienne Vouga of the University of Texas at Austin.