Section: New Results

Graphics with Uncertainty and Heterogeneous Content

Multi-view relighting using a geometry-aware network

Participants : Julien Philip, George Drettakis.

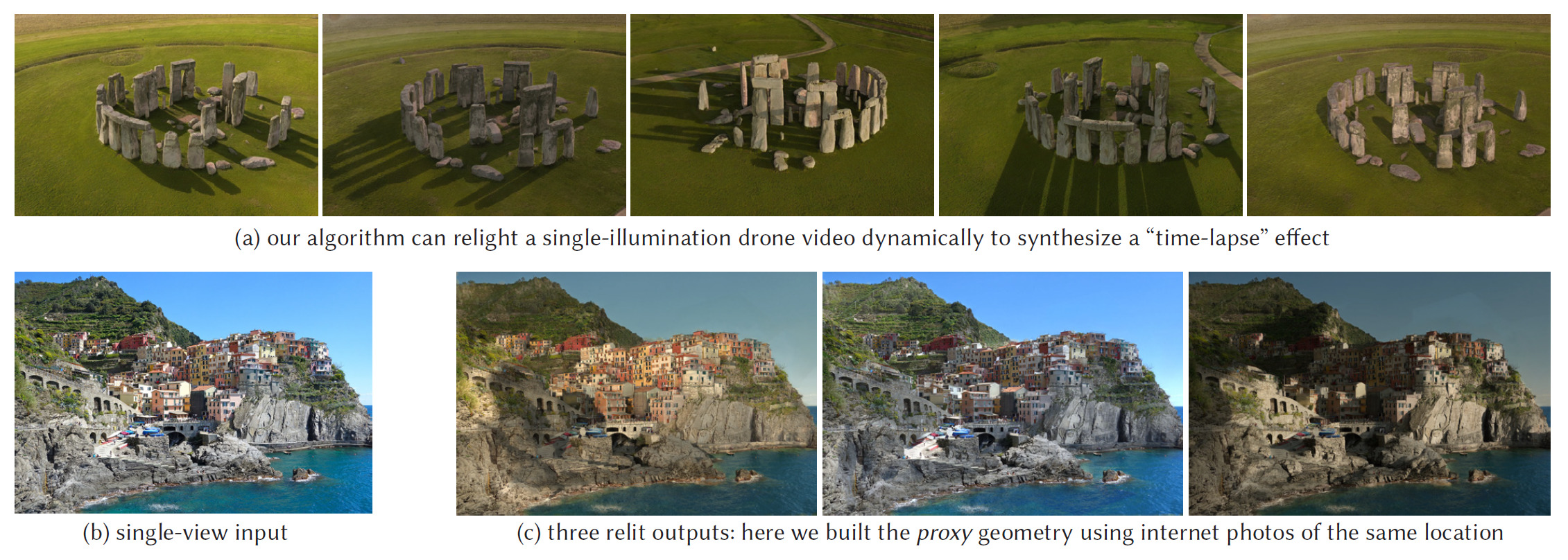

We propose the first learning-based algorithm that can relight images in a plausible and controllable manner given multiple views of an outdoor scene. In particular, we introduce a geometry-aware neural network that utilizes multiple geometry cues (normal maps, specular direction, etc.) and source and target shadow masks computed from a noisy proxy geometry obtained by multi-view stereo. Our model is a three-stage pipeline: two subnetworks refine the source and target shadow masks, and a third performs the final relighting. Furthermore, we introduce a novel representation for the shadow masks, which we call RGB shadow images. They reproject the colors from all views into the shadowed pixels and enable our network to cope with inacuraccies in the proxy and the non-locality of the shadow casting interactions. Acquiring large-scale multi-view relighting datasets for real scenes is challenging, so we train our network on photorealistic synthetic data. At train time, we also compute a noisy stereo-based geometric proxy, this time from the synthetic renderings. This allows us to bridge the gap between the real and synthetic domains. Our model generalizes well to real scenes. It can alter the illumination of drone footage, image-based renderings, textured mesh reconstructions, and even internet photo collections (see Fig. 9).

This work was in collaboration with M. Gharbi of Adobe Research and A. Efros and T. Zhang of UC Berkeley, and was published in ACM Transactions on Graphics and presented at SIGGRAPH 2019 [19].

Flexible SVBRDF Capture with a Multi-Image Deep Network

Participants : Valentin Deschaintre, Frédo Durand, George Drettakis, Adrien Bousseau.

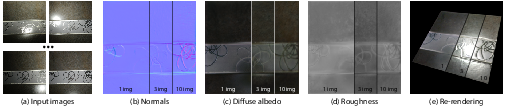

Empowered by deep learning, recent methods for material capture can estimate a spatially-varying reflectance from a single photograph. Such lightweight capture is in stark contrast with the tens or hundreds of pictures required by traditional optimization-based approaches. However, a single image is often simply not enough to observe the rich appearance of real-world materials. We present a deep-learning method capable of estimating material appearance from a variable number of uncalibrated and unordered pictures captured with a handheld camera and flash. Thanks to an order-independent fusing layer, this architecture extracts the most useful information from each picture, while benefiting from strong priors learned from data. The method can handle both view and light direction variation without calibration. We show how our method improves its prediction with the number of input pictures, and reaches high quality reconstructions with as little as 1 to 10 images – a sweet spot between existing single-image and complex multi-image approaches – see Fig. 10.

|

This work is a collaboration with Miika Aittala from MIT CSAIL. This work was published in Computer Graphics Forum, and presented at EGSR 2019 [15].

A short paper and poster summarizing this work together with our 2018 "Single-Image SVBRDF Capture with a Rendering-Aware Deep Network" was published in the Siggraph Asia doctoral consortium 2019 [22].

Guided Acquisition of SVBRDFs

Participants : Valentin Deschaintre, George Drettakis, Adrien Bousseau.

Another project is under development to capture a large-scale SVBRDF from a few pictures of a planar surface. Many existing lightweight methods for SVBRDF capture take as input flash pictures, which need to be acquired close to the surface of interest restricting the scale of capture. We complement such small-scale inputs with a picture of the entire surface, taken under ambient lighting. Our method then fuses these two sources of information to propagate the SVBRDFs estimated from each close-up flash picture to all pixels of the large image. Thanks to our two-scale approach, we can capture surfaces several meters wide, such as walls, doors and furniture. In addition, our method can also be used to create large SVBRDFs from internet pictures, where we use artist-designed SVBRDFs as exemplars of the small-scale behavior of the surface.

Mixed rendering and relighting for indoor scenes

Participants : Julien Philip, Michaël Gharbi, George Drettakis.

We are investigating a mixed image rendering and relighting method that allows a user to move freely in a multi-view interior scene while altering its lighting. Our method uses a deep convolutional network trained on synthetic photo-realistic images. We adapt classical path tracing techniques to approximate complex lighting effects such as color bleeding and reflections.

DiCE: Dichoptic Contrast Enhancement for VR and Stereo Displays

Participant : George Drettakis.

In stereoscopic displays, such as those used in VR/AR headsets, our eyes are presented with two different views. The disparity between the views is typically used to convey depth cues, but it could be also used to enhance image appearance. We devise a novel technique that takes advantage of binocular fusion to boost perceived local contrast and visual quality of images. Since the technique is based on fixed tone curves, it has negligible computational cost and it is well suited for real-time applications, such as VR rendering. To control the trade-of between contrast gain and binocular rivalry, we conducted a series of experiments to explain the factors that dominate rivalry perception in a dichoptic presentation where two images of different contrasts are displayed (see Fig. 11). With this new finding, we can effectively enhance contrast and control rivalry in mono- and stereoscopic images, and in VR rendering, as conirmed in validation experiments.

|

This work was in collaboration with Durham University (G. Koulieris, past postdoc of the group), Cambridge (F. Zhong, R. Mantiuk), UC Berkeley (M. Banks) and ENS Renne (M. Chambe), and was published in ACM Transactions on Graphics and presented at SIGGRAPH Asia 2019 [20].

Compositing Real Scenes using a relighting Network

Participants : Baptiste Nicolet, Julien Philip, George Drettakis.

Image-Based Rendering (IBR) allows for fast rendering of photorealistic novel viewpoints of real-world scenes captured by photographs. While it facilitates the very tedious traditional content creation process, it lacks user control over the appearance of the scene. We propose a novel approach to create novel scenes from a composition of multiple IBR scenes. This method relies on the use of a relighting network, which we first use to match the lighting conditions of each scene, and then to synthesize shadows between scenes in the final composition. This work has been submitted for publication.

Image-based Rendering of Urban Scenes based on Semantic Information

Participants : Simon Rodriguez, Siddhant Prakash, George Drettakis.

Cityscapes exhibit many hard cases for image-based rendering techniques, such as reflective and transparent surfaces. Pre-existing information about the scene can be leveraged to tackle these difficult cases. By relying on semantic information, it is possible to address those regions with tailored algorithms to improve reconstruction and rendering. This project is a collaboration with Peter Hedman from University College of London. This work has been submitted for publication.

Synthetic Data for Image-based Rendering

Participants : Simon Rodriguez, Thomas Leimkühler, George Drettakis.

This project explores the potential of Image-based rendering techniques in the context of real-time rendering for synthetic scenes. Accurate information can be precomputed from the input synthetic scene and used at run-time to improve the quality of approximate global illumination effects while preserving performance. This project is a collaboration with Chris Wyman and Peter Shirley from NVIDIA Research.

Densified Surface Light Fields for Human Capture Video

Participants : Rada Deeb, George Drettakis.

In this project, we focus on video-based rendering for mid-scale platforms. Having a mid-scale platform introduces one important problem for image-based rendering techniques due to low angular resolution. This leads to unrealistic view-dependent effects. We propose to use the temporal domain in a multidimensional surface light field approach in order to enhance the angular resolution. In addition, our approach provides a compact representation essential to dealing with the large amount of data introduced by videos compared to image-based techniques. In addition, we evaluate the use of deep encoder-decoder networks to learn a more compact representation of our multidimensional surface light field. This work is in collaboration with Edmond Boyer, MORPHEO team, Inria Grenoble.

Deep Bayesian Image-based Rendering

Participants : Thomas Leimkühler, George Drettakis.

Deep learning has permeated the field of computer graphics and continues to be instrumental in producing state-of-the-art research results. In the context of image-based rendering, deep architectures are now routinely used for tasks such as blending weight prediction, view extrapolation, or re-lighting. Current algorithms, however, do not take into account the different sources of uncertainty arising from the several stages of the image-based rendering pipeline. In this project, we investigate the use of Bayesian deep learning models to estimate and exploit these uncertainties. We are interested in devising principled methods which combine the expressive power of modern deep learning with the well-groundedness of classical Bayesian models.

Path Guiding for Metropolis Light Transport

Participants : Stavros Diolatzis, George Drettakis.

Path guiding has been proven to be an effective way to achieve faster convergence in Monte Carlo renderings by learning the incident radiance field. However, current path guiding techniques could be beaten by unguided path tracing due to their overhead or inability to incorporate the BSDF distribution factor. In our work, we improve path guiding and Metropolis light transport algorithms with low overhead product sampling between the incoming radiance and BSDF values. We demonstrate that our method has better convergence compared to the previous state-of-the-art techniques. Moreover, combining path guiding with MLT solves the global exploration issues ensuring convergence to the stationary distribution.

This work is an ongoing collaboration with Wenzel Jakob from Ecole Polytechnique Fédérale de Lausanne and Adrien Gruson from McGill University.

Improved Image-Based Rendering with Uncontrolled Capture

Participants : Siddhant Prakash, George Drettakis.

Current state-of-the-art Image Based Rendering (IBR) algorithms, such as Deep Blending, use per-view geometry to render candidate views and machine learning to improve rendering of novel views. The casual capture process employed introduces in visible color artifacts during rendering due to automated camera settings, and incur significant computational overhead when using per-view meshes. We aim to find a global solution to harmonize color inconsistency across the entire set of images in a given dataset, and also improve the performance of IBR algorithms by limiting the use of more advanced techniques only to regions where they are required.

Practical video-based rendering of dynamic stationary environments

Participants : Théo Thonat, George Drettakis.

The goal of this work is to extend traditional Image Based Rendering to capture subtle motions in real scenes. We want to allow free-viewpoint navigation with casual capture, such as a user taking photos and videos with a single smartphone and a tripod. We focus on stochastic time-dependent textures such as waves, flames or waterfalls. We have developed a video representation able to tackle the challenge of blending unsynchronized videos.

This work is a collaboration with Sylvain Paris from Adobe Research, Miika Aittala from MIT CSAIL, and Yagiz Aksoy from ETH Zurich, and has been submitted for publication.