Section: Software

Learning Algorithms

Neural online learning library

Participant: Alexander GEPPERTH [contact person].

nnLib is a C/Python-based library for the efficient simulation of neural online learning algorithms. The core user API is implemented in Python as an object-oriented hierarchy, allowing the creation of neural network layers from configuration files in a completely opaque way, as well as the adaptation of multiple parameters at runtime. Available learning algorithms are: PCA (subspace rule and stochastic gradient ascent), sparse coding, self-organizing map, logistic regression and several variants of Hebbian learning (normalized, decaying, ...). nnLib is under development and will be made available to the public under the GPL in 2012.
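As an illustration of the kind of online rule listed above, the following minimal sketch applies Oja's rule, one well-known normalized variant of Hebbian learning, to recover the first principal direction of a data stream. It is not nnLib code: the function names, parameters and toy data are assumptions made for this example, and it uses plain Python only.

```python
import math
import random


def oja_first_component(samples, learning_rate=0.01, epochs=20, seed=0):
    """Estimate the first principal direction of zero-mean samples with Oja's rule."""
    rng = random.Random(seed)
    dim = len(samples[0])
    # Start from a small random weight vector.
    w = [rng.uniform(-0.1, 0.1) for _ in range(dim)]
    for _ in range(epochs):
        for x in samples:
            # Neuron output: y = w . x
            y = sum(wi * xi for wi, xi in zip(w, x))
            # Hebbian term (y * x) plus Oja's decay term (-y^2 * w),
            # which keeps the weight norm close to 1.
            w = [wi + learning_rate * y * (xi - y * wi) for wi, xi in zip(w, x)]
    return w


def make_samples(n=500, seed=1):
    """Hypothetical toy data: 2-D points stretched along (1, 1) / sqrt(2)."""
    rng = random.Random(seed)
    s = math.sqrt(0.5)
    samples = []
    for _ in range(n):
        a = rng.gauss(0.0, 2.0)  # large spread along (1, 1)
        b = rng.gauss(0.0, 0.3)  # small spread along (1, -1)
        samples.append([s * (a + b), s * (a - b)])
    return samples


weights = oja_first_component(make_samples())
norm = math.sqrt(sum(wi * wi for wi in weights))
print(weights, norm)
```

Each sample is processed once per pass and then discarded, which is what makes the rule usable online; the learned vector should end up roughly parallel to (1, 1) with a norm near 1, up to sign.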

RLPark - Reinforcement Learning Algorithms in Java

Participant: Thomas Degris [contact person].

RLPark is a reinforcement learning framework in Java. RLPark includes learning algorithms, state representations, reinforcement learning architectures, standard benchmark problems, communication interfaces for three robots, a framework for running experiments on clusters, and real-time visualization using Zephyr. More precisely, RLPark includes:

- Online Learning Algorithms: Sarsa, Expected Sarsa, Q-Learning, Actor-Critic with normal distribution (continuous actions) and Boltzmann distribution (discrete actions), average reward actor-critic, TD, TD(λ), GTD(λ), GQ(λ), TDC

- State Representations: tile coding (with no hashing, hashing and hashing with murmur2), Linear Threshold Unit, observation history, feature normalization, radial basis functions

- Interface with Robots: the Critterbot, iRobot Create, Nao

- Benchmark Problems: mountain car, swing-up pendulum, random walk, continuous grid world
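To make one entry of the list concrete, here is a minimal sketch of tabular TD(λ) with accumulating eligibility traces on a small random-walk chain. It is written in Python for brevity and does not use or mirror the RLPark Java API; the function name, the five-state chain, the rewards and all parameter values are assumptions chosen for this example.

```python
import random


def td_lambda_random_walk(episodes=5000, n_states=5, alpha=0.005,
                          lam=0.8, gamma=1.0, seed=0):
    """Tabular TD(lambda) with accumulating traces on a random-walk chain."""
    rng = random.Random(seed)
    values = [0.5] * n_states          # value estimate per non-terminal state
    for _ in range(episodes):
        traces = [0.0] * n_states      # eligibility traces, reset each episode
        state = n_states // 2          # start in the middle of the chain
        while True:
            next_state = state + rng.choice((-1, 1))
            if next_state < 0:                 # left terminal, reward 0
                reward, next_value, done = 0.0, 0.0, True
            elif next_state >= n_states:       # right terminal, reward 1
                reward, next_value, done = 1.0, 0.0, True
            else:
                reward, next_value, done = 0.0, values[next_state], False
            # TD error for the observed transition.
            delta = reward + gamma * next_value - values[state]
            # Decay all traces, then mark the current state as visited.
            traces = [gamma * lam * e for e in traces]
            traces[state] += 1.0
            # Move every state's value in proportion to its trace.
            values = [v + alpha * delta * e for v, e in zip(values, traces)]
            if done:
                break
            state = next_state
    return values


estimates = td_lambda_random_walk()
print(estimates)
```

For this chain the true values are 1/6, 2/6, ..., 5/6 (the probability of ending on the right), so the estimates can be checked against them; with a constant step size they keep fluctuating slightly around those targets.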

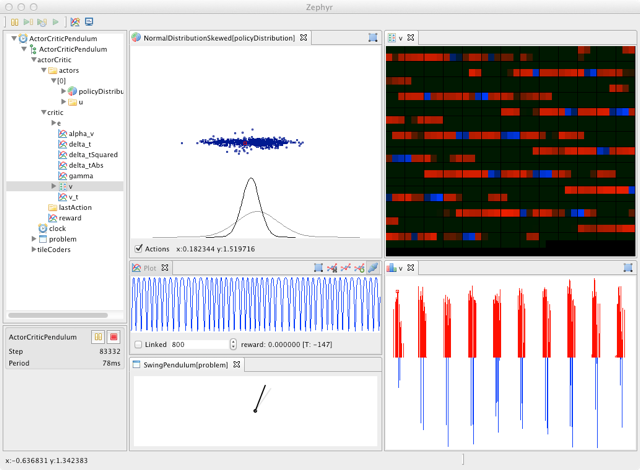

An example of RLPark running an online learning experiment on a reinforcement learning benchmark problem is shown in Figure 2.

RLPark was started in spring 2009 in the RLAI group at the University of Alberta (Canada), when Thomas Degris was a postdoc in this group. RLPark is still actively used by RLAI. Collaborators and users include Adam White (patches for bug fixes, testing), Joseph Modayil (implementation of the Nao interface, patches for bug fixes, testing) and Patrick Pilarski (testing) from the University of Alberta. RLPark has also been used by Richard Sutton, a professor and iCORE chair in the Department of Computing Science at the University of Alberta, for a demo in his invited talk "Learning from Data" at the Neural Information Processing Systems (NIPS) 2011 conference. Future developments include the implementation of additional algorithms (the Dyna architecture, backpropagation in neural networks, ...), optimization of vector operations on GPUs (with OpenCL), and additional demos. Future dissemination includes a paper in preparation for the JMLR Machine Learning Open Source Software track. Documentation and tutorials are available on the RLPark web site (http://thomasdegris.github.com/rlpark/). RLPark is licensed under the open source Eclipse Public License.


Autonomous or Guided Explorer (AGE)

Participant: Sao Mai NGUYEN [contact person].

The "Autonomous or Guided Explorer" program is designed for the systematic evaluation and comparison of different exploration mechanisms that allow a simulated or real robot to learn and build models by self-exploration or social learning. Its design makes it easy to select among different intrinsically motivated exploration mechanisms and classical social learning mechanisms. Provided algorithms include Random Exploration, SAGG-RIAC, SGIM-D, imitation learning and learning by observation. The program uses the new object-oriented programming capabilities of Matlab to enhance flexibility and modularity. The main program is built around objects that represent the different modules and the general architecture of such learning algorithms: action space exploration, goal space exploration, interaction with a human, robot control and model computation, as well as evaluation and visualisation modules.

The software is designed so that learning parameters are easy to tune and so that it can easily be plugged into other robotic setups. Its object-oriented structure allows safe adaptation to different robotic setups, to learning tasks in which the structure of the model to learn differs, and to different action or goal spaces. This program is used by Sao Mai Nguyen of the team to compare the performance of different learning algorithms. These results were partly published in [27]. Future work will take advantage of its flexibility to implement new default robotic setups, robot control, action and goal spaces and, most of all, new types of interaction with a human.

NMF Python implementation

Participant: Olivier Mangin [contact person].

This library is meant to implement various algorithms for Nonnegative Matrix Factorization (NMF) in the Python programming language, on top of the NumPy and SciPy scientific libraries.

Some Python NMF libraries already exist, such as the one in the scikit-learn project. However, most of them are quite limited with respect to recent advances in these techniques (for example, the extension of NMF algorithms to wider families of penalties such as the beta-divergence family). On the other hand, MATLAB software has been released by the authors of some of these algorithms, but code is not available for every algorithm of interest, and none of these separate pieces of code implements the whole set of features that one would like to use.
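For readers unfamiliar with the technique, the sketch below shows the classic multiplicative-update scheme for NMF under the squared Frobenius error, which corresponds to the beta = 2 member of the beta-divergence family. It is not code from the library described here: the function names and toy matrix are assumptions, and it deliberately uses plain Python lists rather than NumPy so that it runs without dependencies.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    cols_b = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b] for row in a]


def transpose(a):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*a)]


def frobenius_error(v, w, h):
    """Squared Frobenius norm of V - WH."""
    wh = matmul(w, h)
    return sum((vij - whij) ** 2
               for v_row, wh_row in zip(v, wh)
               for vij, whij in zip(v_row, wh_row))


def nmf(v, rank, iterations=500, seed=0, eps=1e-9):
    """Factor a nonnegative matrix V (n x m) as W (n x rank) times H (rank x m)."""
    rng = random.Random(seed)
    n, m = len(v), len(v[0])
    # Strictly positive random initialization keeps all updates well defined.
    w = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(n)]
    h = [[rng.random() + 0.1 for _ in range(m)] for _ in range(rank)]
    for _ in range(iterations):
        # H <- H * (W^T V) / (W^T W H), element-wise
        wt = transpose(w)
        num, den = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[i][j] * num[i][j] / (den[i][j] + eps) for j in range(m)]
             for i in range(rank)]
        # W <- W * (V H^T) / (W H H^T), element-wise
        ht = transpose(h)
        num, den = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][j] * num[i][j] / (den[i][j] + eps) for j in range(rank)]
             for i in range(n)]
    return w, h


# Hypothetical toy data: a 4 x 3 nonnegative matrix of rank 2.
V = [[1.0, 2.0, 3.0],
     [2.0, 4.0, 6.0],
     [1.0, 1.0, 1.0],
     [3.0, 4.0, 5.0]]
W, H = nmf(V, rank=2)
error = frobenius_error(V, W, H)
print(error)
```

Because every update multiplies a nonnegative entry by a nonnegative ratio, W and H stay nonnegative without any explicit projection step. Other members of the beta-divergence family lead to updates of the same multiplicative form with different numerators and denominators.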

This project is at a very early stage and is so far only for internal use in the team. It could, however, be released in the future, for example integrated into the previously mentioned scikit-learn project.