Section: New Results

Evaluation of an IEC Framework for Guided Visual Search

Participants: Nadia Boukhelifa [correspondent], Anastasia Bezerianos, Waldo Cancino, Evelyne Lutton.

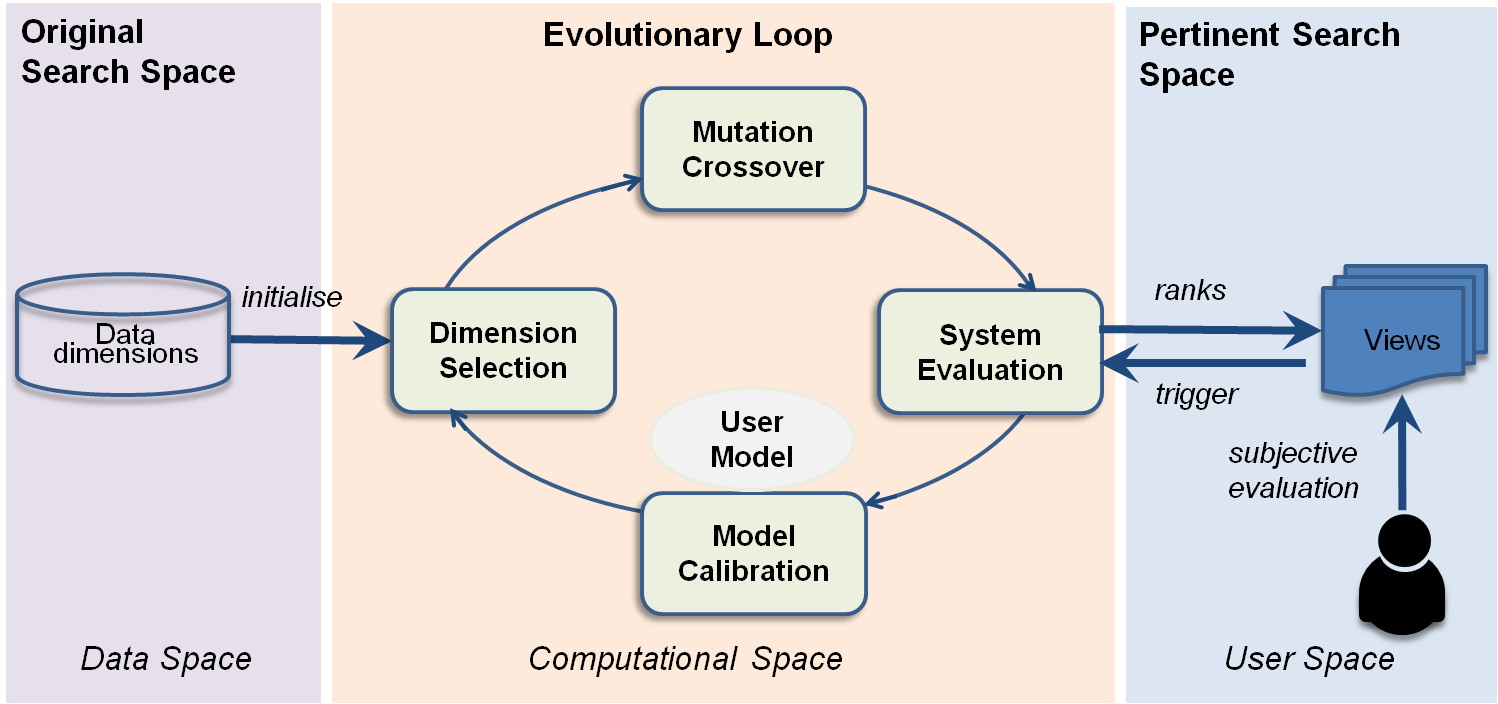

We evaluated and analysed a framework for Evolutionary Visual Exploration (EVE) [14] (Figure 10) that guides users in exploring large search spaces. EVE uses an interactive evolutionary algorithm to steer the exploration of multidimensional datasets towards two-dimensional projections that are interesting to the analyst. The method combines automatically calculated metrics with user input in order to propose pertinent views to the user. We revisited this framework and a prototype application that was developed as a demonstrator, and summarized our previous study with domain experts and its main findings. We then reported on results from a new user study with a clear predefined task that examined how users leveraged the system and how the system evolved to match their needs.
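To make the combination of automatic metrics and user input concrete, the following is a minimal sketch of one possible interactive evolutionary loop over two-dimensional linear projections. It is not the EVE implementation: every function name, the weighting parameter `alpha`, the use of absolute Pearson correlation as a stand-in for an automatic indicator, and the truncation-plus-mutation scheme are assumptions made for illustration only. The user's feedback is modelled by a hypothetical callback `rate_view` that returns a score in [0, 1].

```python
import random

def project(data, candidate):
    """Project each data row onto the two axes given by a candidate
    (a pair of weight vectors, one per screen axis)."""
    wx, wy = candidate
    return [(sum(w * v for w, v in zip(wx, row)),
             sum(w * v for w, v in zip(wy, row))) for row in data]

def auto_metric(points):
    """Stand-in automatic indicator (assumption): absolute Pearson
    correlation of the projected view, in [0, 1]."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points)
    sxx = sum((p[0] - mx) ** 2 for p in points)
    syy = sum((p[1] - my) ** 2 for p in points)
    if sxx == 0 or syy == 0:
        return 0.0
    return min(1.0, abs(sxy) / (sxx * syy) ** 0.5)

def fitness(data, candidate, user_score, alpha=0.5):
    """Weighted blend of the automatic indicator and the user's score.
    The linear blend and alpha are illustrative assumptions."""
    return alpha * auto_metric(project(data, candidate)) + (1 - alpha) * user_score

def mutate(candidate, rng, sigma=0.1):
    """Perturb every projection weight with Gaussian noise."""
    return tuple([w + rng.gauss(0, sigma) for w in axis] for axis in candidate)

def evolve(data, population, rate_view, rng, generations=5, alpha=0.5):
    """Keep the better half of the views each generation and refill the
    population with mutated copies of the survivors."""
    for _ in range(generations):
        scored = sorted(population,
                        key=lambda c: fitness(data, c, rate_view(c), alpha),
                        reverse=True)
        parents = scored[:max(1, len(scored) // 2)]
        children = [mutate(rng.choice(parents), rng)
                    for _ in range(len(population) - len(parents))]
        population = parents + children
    return population
```

In an interactive setting, `rate_view` would display the projection and wait for the analyst's rating; in a simulation it can be any function of the candidate. Raising `alpha` gives more weight to the automatic indicator, lowering it gives more weight to the user.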

We previously showed that domain experts using EVE were able to formulate interesting hypotheses and reach new insights when exploring freely. The new findings indicated that users, guided by the interactive evolutionary algorithm, were also able to converge quickly to an interesting view of their data when a clear task was specified. We provided a detailed analysis of how users interact with an evolutionary algorithm and how the system responded to their exploration strategies and evaluation patterns. This line of work aims at building a bridge between the domains of visual analytics and interactive evolution. The benefits are numerous, in particular for evaluating Interactive Evolutionary Computation (IEC) techniques based on user study methodologies.

Next, we summarized and reflected upon our experience in evaluating our guided exploratory visualization system [35]. This system guided users in their exploration of multidimensional datasets to pertinent views of their data, where the notion of pertinence is defined by automatic indicators, such as the amount of visual patterns in the view, and by subjective user feedback obtained during their interaction with the tool. To evaluate this type of system, we argued for deploying a collection of validation methods that are both user-centered, observing the utility and effectiveness of the system for the end-user, and algorithm-centered, analysing the computational behaviour of the system. We reported on observations and lessons learnt from working with expert users both for the design and the evaluation of our system.

More on the project Web page: http://www.aviz.fr/EVE