Section: New Results

Gestures and Tangibles


Custom-made Tangible Interfaces with TouchTokens

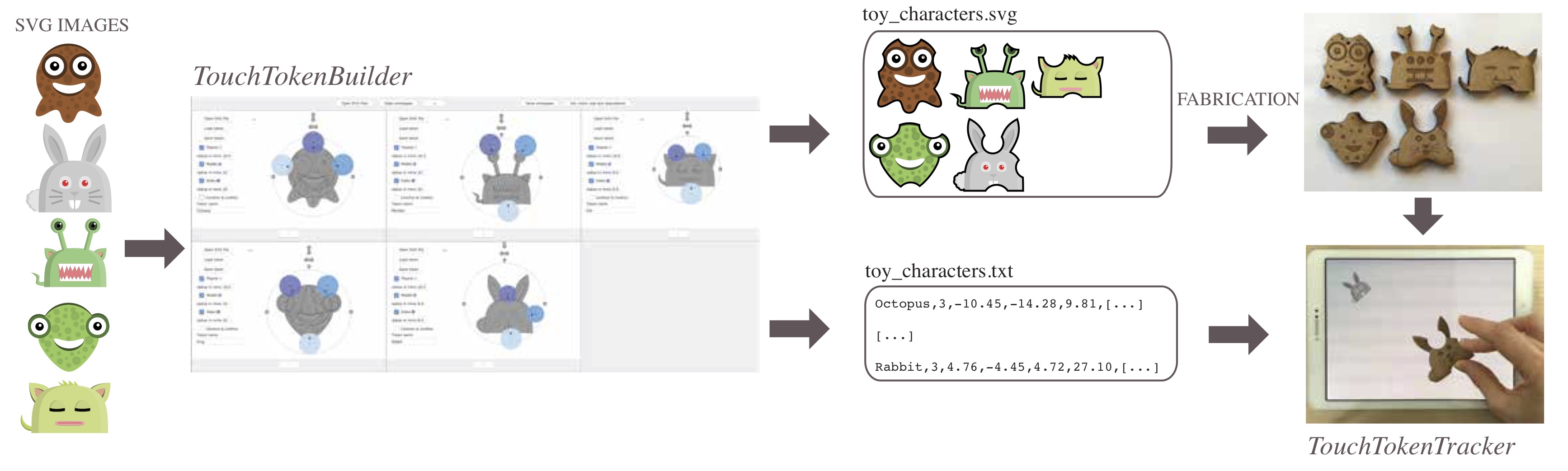

One of our main results in this area is the design, development and evaluation of TouchTokens, a new way of prototyping and implementing low-cost tangible interfaces [6]. The approach requires only passive tokens and a regular multi-touch surface. The tokens constrain users' grasp, and thus the relative spatial configuration of fingers on the surface, which in principle makes it possible to design algorithms that recognize the resulting touch patterns. Our latest project on TouchTokens [17] has focused on tailoring tokens, going beyond the limited set of geometrical shapes studied in [6], as illustrated in Figure 4.
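The recognition principle can be illustrated with a minimal sketch. It assumes each token is grasped with exactly three fingers and describes a touch pattern by its sorted pairwise distances, which stay the same when the token is moved or rotated on the surface. The token names, template distances (in millimetres) and the `max_error` tolerance are hypothetical placeholders, and nearest-template matching is a generic technique chosen for illustration, not necessarily the algorithm used in [6] or [17].

```python
import math
from itertools import combinations

# Hypothetical templates: sorted pairwise finger distances (mm) for each token.
# Illustrative values only, not measurements from the TouchTokens work.
TEMPLATES = {
    "square": (30.0, 30.0, 42.4),
    "circle": (25.0, 35.0, 50.0),
    "triangle": (40.0, 40.0, 40.0),
}


def signature(points):
    """Translation- and rotation-invariant signature: sorted pairwise distances."""
    return tuple(sorted(math.dist(p, q) for p, q in combinations(points, 2)))


def recognize(points, templates=TEMPLATES, max_error=5.0):
    """Return the closest token template name, or None if no template is close enough."""
    if len(points) != 3:
        return None  # this sketch only handles three-finger grasps
    sig = signature(points)
    best_name, best_err = None, float("inf")
    for name, ref in templates.items():
        # Root-mean-square difference between observed and template distances.
        err = math.sqrt(sum((a - b) ** 2 for a, b in zip(sig, ref)) / len(ref))
        if err < best_err:
            best_name, best_err = name, err
    return best_name if best_err <= max_error else None
```

For example, `recognize([(0, 0), (30, 0), (30, 30)])` returns `"square"` wherever those three touches are placed and however they are rotated, while a configuration far from every template returns `None`. Sorting the distances discards which finger is which, so tokens whose finger triangles are congruent would need richer features to be told apart.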


Designing Coherent Gesture Sets for Multi-scale Navigation on Tabletops

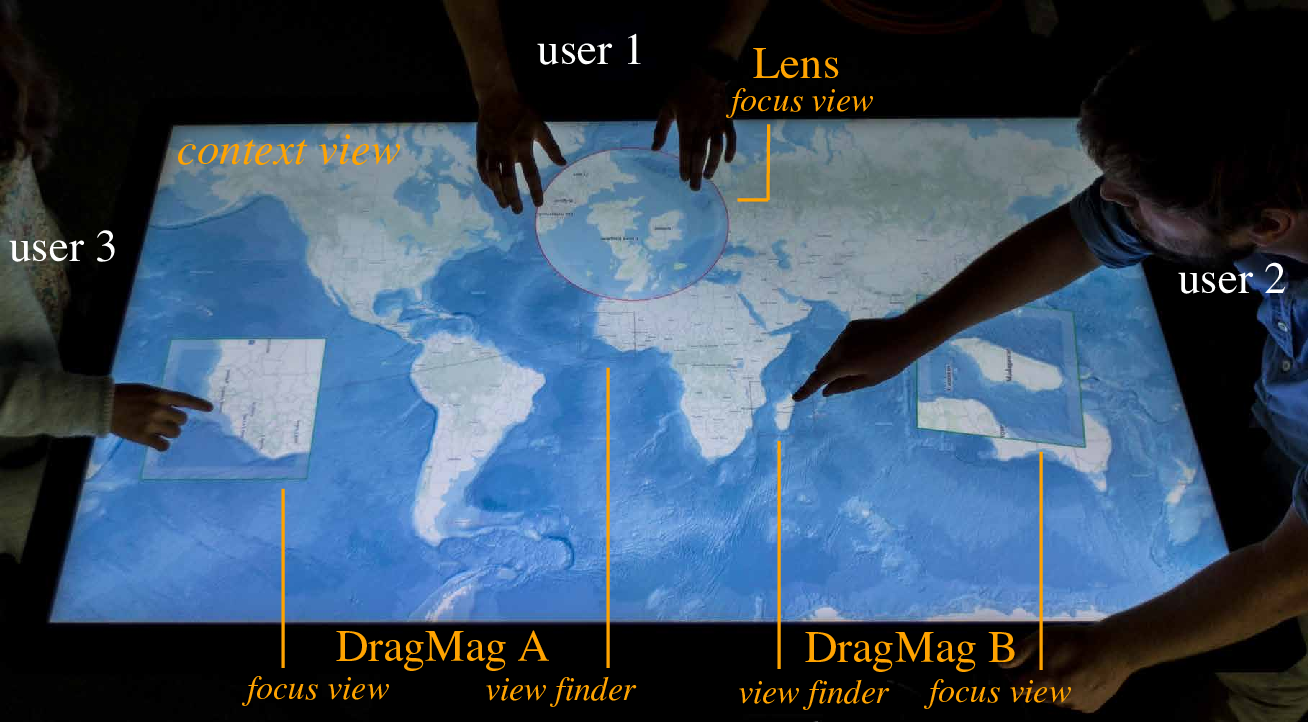

We designed a framework for the study of multi-scale navigation (Figure 5) and conducted a controlled experiment on tabletops [25]. We first conducted a guessability study in which we elicited user-defined gestures for triggering a coherent set of navigation actions, and then proposed two interface designs that combine the now-ubiquitous slide, pinch and turn gestures either with two-hand variations of these gestures or with widgets. In a comparative study, we observed that users can easily learn both designs, and that the gesture-based, visually minimalist design is a viable option that saves display space for other controls.
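The slide, pinch and turn gestures are commonly decoded from two touch points as a translation, a scale factor and a rotation. The sketch below shows that standard decomposition for a single pair of fingers; it assumes two tracked touches with known initial and current positions, and the names `Transform` and `two_finger_transform` are hypothetical. It does not model the two-hand variations or the widgets of the designs compared in [25].

```python
import math
from dataclasses import dataclass


@dataclass
class Transform:
    dx: float     # slide: translation of the midpoint between the two fingers
    dy: float
    scale: float  # pinch: ratio of current to initial finger spread
    angle: float  # turn: change in orientation of the finger axis, in radians


def two_finger_transform(a0, b0, a1, b1):
    """Decompose two touches moving from (a0, b0) to (a1, b1) into slide, pinch and turn."""
    v0 = (b0[0] - a0[0], b0[1] - a0[1])
    v1 = (b1[0] - a1[0], b1[1] - a1[1])
    spread0 = math.hypot(*v0)
    if spread0 == 0:
        raise ValueError("initial touches coincide; scale is undefined")
    mid0 = ((a0[0] + b0[0]) / 2, (a0[1] + b0[1]) / 2)
    mid1 = ((a1[0] + b1[0]) / 2, (a1[1] + b1[1]) / 2)
    scale = math.hypot(*v1) / spread0
    angle = math.atan2(v1[1], v1[0]) - math.atan2(v0[1], v0[0])
    # Wrap into [-pi, pi] so a small turn is never reported as nearly a full revolution.
    angle = math.atan2(math.sin(angle), math.cos(angle))
    return Transform(mid1[0] - mid0[0], mid1[1] - mid0[1], scale, angle)
```

Spreading two fingers from 10 to 20 units apart about a fixed midpoint yields `scale == 2.0` with no translation or rotation; a navigation layer could then map each component to a navigation action such as panning, zooming or rotating the view.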

Command Memorization, Gestures and Other Triggering Methods

In collaboration with Telecom ParisTech, we studied the impact of semantic aids on command memorization with either on-body interaction or directional gestures [21]. Previous studies had shown that spatial memory and semantic aids can help users learn and remember gestural commands. Using the body as a support to combine both dimensions had therefore been proposed, but no formal evaluation had been reported. We compared a new on-body interaction technique (BodyLoci) to mid-air Marking menus in a virtual reality context, under three levels of semantic aid: no aid, story-making, and story-making with background images.

As part of the same collaboration, we also studied how memorizing positions or directions affects gesture learning for command selection. Many selection techniques rely either on directional gestures (e.g., Marking menus) or on pointing gestures using a spatially stable arrangement of items (e.g., FastTap). Both types of techniques are known to leverage memorization, but not necessarily for the same reasons. We investigated whether using directions or positions affects gesture learning [20].
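The distinction between direction-based and position-based selection can be made concrete with two small functions. The first mimics a Marking menu: only the direction of the stroke matters, not where it starts or how long it is. The second mimics a FastTap-like grid: only the absolute position of the tap matters. The eight command names, the 4-column grid and the `cell_size` parameter are hypothetical and do not reproduce the apparatus used in [20].

```python
import math

# Hypothetical 8-command menu shared by both selection styles.
ITEMS = ["copy", "paste", "cut", "undo", "redo", "save", "open", "close"]


def select_by_direction(start, end, items=ITEMS):
    """Marking-menu style: the stroke direction picks one of len(items) equal sectors.

    Assumes y grows upward; negate dy for screen coordinates where y grows downward.
    """
    dx, dy = end[0] - start[0], end[1] - start[1]
    if dx == 0 and dy == 0:
        return None  # a tap has no direction
    angle = math.atan2(dy, dx) % (2 * math.pi)  # 0 = east, counter-clockwise
    sector = 2 * math.pi / len(items)
    # Shift by half a sector so each item's sector is centred on its direction.
    index = int(((angle + sector / 2) % (2 * math.pi)) // sector) % len(items)
    return items[index]


def select_by_position(tap, origin, cell_size, cols=4, items=ITEMS):
    """FastTap-like grid: the cell containing the tap picks the item."""
    col = int((tap[0] - origin[0]) // cell_size)
    row = int((tap[1] - origin[1]) // cell_size)
    index = row * cols + col
    if col < 0 or col >= cols or row < 0 or index >= len(items):
        return None  # tap outside the grid
    return items[index]
```

Translating or lengthening a stroke does not change what `select_by_direction` returns, whereas `select_by_position` depends only on where the tap lands, so what a user has to remember differs between the two styles.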