Section: New Software and Platforms

Software Platforms

Meka robot platform enhancement and maintenance

Participants : Antoine Hoarau, Freek Stulp, David Filliat.

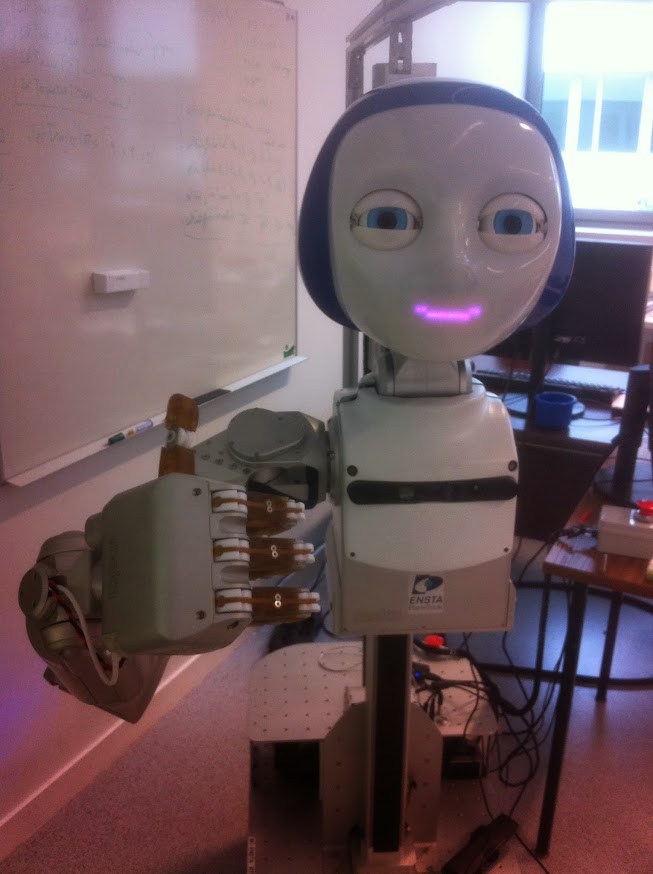

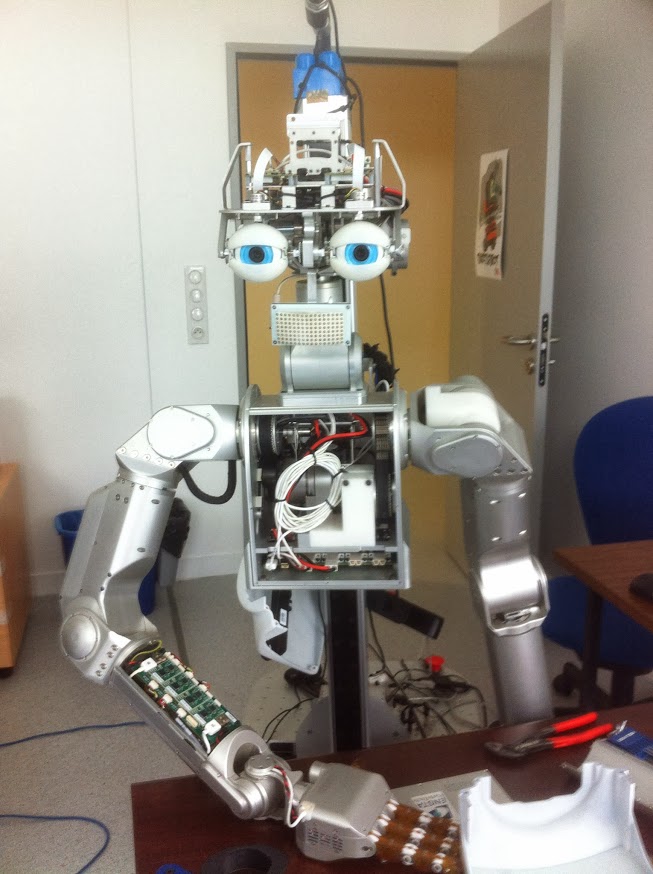

Autonomous human-centered robots, for instance robots that assist people with disabilities, must be able to physically manipulate their environment. The FLOWERS team is therefore strongly interested in applying the developmental approach to robotics, in particular to the acquisition of sophisticated manipulation and perception skills. ENSTA-ParisTech has recently acquired a Meka humanoid robot (cf. 7) dedicated to human-robot interaction, which is well suited to this research. The goal of this project is to install a state-of-the-art software architecture and libraries for perception and control on the Meka robot, so that it can be used jointly by FLOWERS and ENSTA. In particular, we want to provide the robot with an initial set of manipulation skills.

The goal is to develop a set of demos that showcase the capabilities of the Meka and provide a basis on which researchers can start their experiments.

The platform keeps evolving because its software (Ubuntu, ROS, our own code) is constantly updated; it therefore requires regular maintenance, which reduces the effort needed later. A few demos were added, such as the handshaking demo, in which the robot detects people with a Kinect and initiates a handshake accompanied by facial expressions. This demo was used to set up a larger human-robot interaction experiment, currently being run with subjects at ENSTA (cf. 8). Finally, the robot itself also needs maintenance: some components broke (a finger tendon), a weld in the arm turned out to be a cold joint, and a few cables suffered from fatigue (LED matrix and cameras) (cf. 9).

Teaching concepts to the Meka robot

Participants : Fabio Pardo [Correspondant] , Olivier Mangin, Anna-Lisa Vollmer, Yuxin Chen, David Filliat.

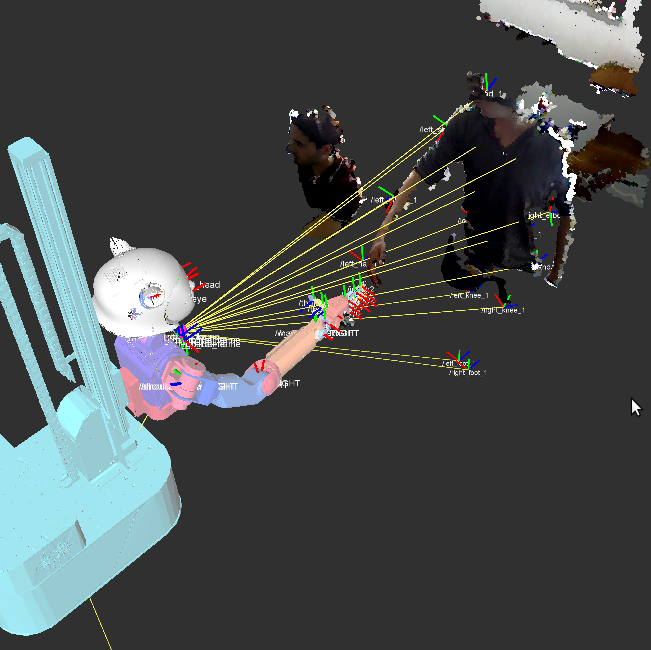

This platform was developed during Fabio Pardo's internship, in the dual context of Anna-Lisa Vollmer's research on human-robot interaction protocols during a learning task and Olivier Mangin's research on mechanisms for word learning and multimodal concept acquisition. The platform is centered around an interaction zone where objects are presented to a Meka robot, augmented with a Kinect camera placed above the interaction zone. Several colorful objects are available to be presented and described to the robot, and several objects may be present on the table at the same time. Typical objects are easily characterized by their colors and shapes, such as the red ball, the yellow cup, or the blue wagon with red wheels.

The robot software abstracts visual and acoustic perception as follows. The camera image is segmented into objects; from each object, a set of descriptors is extracted, typically SIFT or shape descriptors and color histograms. An incremental clustering algorithm transforms these continuous descriptors into a histogram of discrete visual descriptors, which is provided to the learning algorithm. The acoustic stream is segmented into sentences by a silence detection process, and each sentence is sent to Google's speech recognition (speech-to-text) API. Finally, each sentence is represented as a histogram of the words recognized in it.
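To make the abstraction step more concrete, here is a minimal Python sketch. The report does not name the incremental clustering algorithm, so the sketch assumes a simple threshold-based "leader" clustering: a descriptor joins the nearest existing cluster if it lies within a distance `threshold` (a hypothetical parameter), otherwise it founds a new cluster, and cluster indices act as discrete visual words. Whitespace tokenization of the recognized sentence is likewise an assumption, and `IncrementalClusterer`, `visual_histogram` and `word_histogram` are illustrative names, not the platform's code.

```python
import math
from collections import Counter

# Sketch only: the report does not name its clustering algorithm;
# a threshold-based "leader" clustering is assumed here.
class IncrementalClusterer:
    def __init__(self, threshold):
        self.threshold = threshold
        self.centers = []  # running mean of each cluster
        self.counts = []   # number of descriptors assigned to each cluster

    def assign(self, descriptor):
        """Return the cluster index (visual word) of a continuous descriptor."""
        best, best_dist = None, math.inf
        for k, center in enumerate(self.centers):
            d = math.dist(descriptor, center)
            if d < best_dist:
                best, best_dist = k, d
        if best is None or best_dist > self.threshold:
            # Too far from every known cluster: create a new visual word.
            self.centers.append(list(descriptor))
            self.counts.append(1)
            return len(self.centers) - 1
        # Move the chosen center toward the new descriptor (running mean).
        self.counts[best] += 1
        n = self.counts[best]
        self.centers[best] = [c + (x - c) / n for c, x in zip(self.centers[best], descriptor)]
        return best

def visual_histogram(clusterer, descriptors):
    """Bag of visual words for one segmented object."""
    return Counter(clusterer.assign(d) for d in descriptors)

def word_histogram(transcript):
    """Bag of words for one recognized sentence (whitespace tokenization assumed)."""
    return Counter(transcript.lower().split())

clusterer = IncrementalClusterer(threshold=0.5)
print(visual_histogram(clusterer, [(0.9, 0.1, 0.0), (0.95, 0.05, 0.0), (0.0, 0.1, 0.9)]))  # Counter({0: 2, 1: 1})
print(word_histogram("The red ball"))
```

Because new clusters can be created at any time, the visual vocabulary grows with experience, which fits an incremental learning setting; the threshold controls how finely descriptors are split into words.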

The robot is capable of learning multimodal concepts, spanning words and visual concepts, through the nonnegative matrix factorization framework introduced by Olivier Mangin (see ). In addition, several behaviors are programmed on the robot, such as following objects with its gaze or understanding a few interaction questions.
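The NMF-based learning step can be illustrated with a small, dependency-free Python sketch. Each column of the data matrix `V` concatenates the visual histogram and the word histogram of one demonstration; NMF approximates `V ≈ W H` with nonnegative factors, so each column of `W` acts as a multimodal concept spanning both modalities. The sketch uses the classic Lee and Seung multiplicative updates for the squared Frobenius error, which is a swapped-in choice: the framework cited above may use a different cost function and inference procedure. To name an unseen object, it fits only the activations against the visual rows of `W` and reads a word histogram off the word rows. All function names and the toy data are hypothetical.

```python
import random

# Sketch: Frobenius-norm NMF with multiplicative updates (an assumption;
# the original framework may use another cost function).

def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def nmf_update(V, W, H, iters, update_W=True, eps=1e-9):
    """Lee-Seung updates for min ||V - W H||^2 with W, H >= 0.
    With update_W=False, only H is fitted and W stays fixed."""
    for _ in range(iters):
        Wt = transpose(W)
        num, den = matmul(Wt, V), matmul(matmul(Wt, W), H)
        H = [[h * n / (d + eps) for h, n, d in zip(hr, nr, dr)]
             for hr, nr, dr in zip(H, num, den)]
        if update_W:
            Ht = transpose(H)
            num, den = matmul(V, Ht), matmul(W, matmul(H, Ht))
            W = [[w * n / (d + eps) for w, n, d in zip(wr, nr, dr)]
                 for wr, nr, dr in zip(W, num, den)]
    return W, H

def random_matrix(rows, cols, rng):
    # Strictly positive start: multiplicative updates cannot revive exact zeros.
    return [[rng.random() + 0.1 for _ in range(cols)] for _ in range(rows)]

def learn_concepts(V, k, iters=500, seed=0):
    """Factorize V (features x demonstrations) into k multimodal concepts."""
    rng = random.Random(seed)
    W, H = random_matrix(len(V), k, rng), random_matrix(k, len(V[0]), rng)
    return nmf_update(V, W, H, iters)

def predict_words(W, n_visual, visual_column, iters=300, seed=0):
    """Explain a visual-only histogram with the visual rows of W, then
    reconstruct the word histogram from the word rows."""
    W_vis, W_words = W[:n_visual], W[n_visual:]
    h = random_matrix(len(W[0]), 1, random.Random(seed))
    _, h = nmf_update([[x] for x in visual_column], W_vis, h, iters, update_W=False)
    return [row[0] for row in matmul(W_words, h)]

# Hypothetical toy data: rows = visual "red", visual "blue", word "red", word "blue";
# columns = four demonstrations.
V = [[1, 0, 1, 0], [0, 1, 0, 1], [1, 0, 1, 0], [0, 1, 0, 1]]
W, H = learn_concepts(V, k=2)
print(predict_words(W, n_visual=2, visual_column=[1, 0]))  # the word "red" should dominate
```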

The framework is illustrated in a video (https://www.youtube.com/watch?v=Ym5aYfzoQX8). It makes it possible to modify both the interaction and the learning mechanisms in order to study the interaction between the teacher and the learning robot.

Experiment platform for multi-parameter simulations

Participants : Fabien Benureau, Paul Fudal.

Simulations in robotics have many shortcomings. At the same time, they offer high customizability, rapid deployment, absence of hardware failure, consistency across time, and scalability. In the context of Fabien Benureau's PhD work, we decided to investigate hypotheses in simulation first before moving to real hardware. To be able to test a large number of different hypotheses, we developed a software platform that scales to the available computing resources.

We designed simple continuous simulations around an off-the-shelf 2D physics engine and wrote a highly modular platform that automatically deploys experiments on cluster environments, with proper handling of dependencies: since our work investigates transfer learning, the input data of some experiments depends on the results of others.
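The dependency handling described above amounts to scheduling a directed acyclic graph of experiments. A minimal sketch with Python's standard `graphlib` module (Python 3.9+) groups experiments into successive waves, where each wave depends only on earlier ones and can therefore be submitted to the cluster in parallel. The experiment names are hypothetical and the wave-based policy is an assumption; the report does not describe how the platform actually orders or submits its jobs.

```python
from graphlib import TopologicalSorter

# Hypothetical experiment names; wave scheduling is an assumption, not the
# platform's documented behavior.
def schedule_waves(dependencies):
    """Map experiment -> set of experiments whose results it needs,
    to a list of waves that can each run fully in parallel."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all inputs already available
        waves.append(ready)
        sorter.done(*ready)  # here: pretend the whole wave has finished
    return waves

deps = {
    "source_A": set(),
    "baseline_B": set(),
    "transfer_A_to_B": {"source_A"},
    "transfer_A_to_C": {"source_A"},
    "compare_B": {"transfer_A_to_B", "baseline_B"},
}
for i, wave in enumerate(schedule_waves(deps)):
    print(i, wave)
```

Here the two source experiments form the first wave, both transfer experiments the second, and the comparison the third. A real deployment would rather call `done()` as individual cluster jobs finish, so that downstream experiments start as early as possible; grouping by waves only keeps the sketch short.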

So far, this platform and the university cluster have allowed us to conduct thousands of simulations in parallel, totaling more than 10 years of simulation time. This made it possible to present many diverse experiments in our published work [34], each repeated numerous times. It also allowed us to conduct a multi-parameter analysis of the setup, which led to new insights that will be presented in a journal article to be submitted early this year.
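A multi-parameter analysis with repeated runs boils down to expanding a parameter grid into independent jobs, which is what lets thousands of simulations run in parallel. The sketch below does this with `itertools.product`; the parameter names, their values and the per-repetition `seed` field are hypothetical, chosen only to illustrate the idea.

```python
import itertools

def expand_sweep(grid, repetitions):
    """Return one job description per (parameter combination, repetition).
    Each repetition is tagged with its own seed index."""
    names = sorted(grid)  # fixed ordering gives a deterministic job list
    jobs = []
    for values in itertools.product(*(grid[name] for name in names)):
        for seed in range(repetitions):
            jobs.append({**dict(zip(names, values)), "seed": seed})
    return jobs

# Hypothetical grid: 2 arm lengths x 3 learning rates x 10 repetitions = 60 jobs.
jobs = expand_sweep({"arm_length": [0.5, 1.0], "learning_rate": [0.01, 0.1, 1.0]}, repetitions=10)
print(len(jobs), jobs[0])
```

Since every job is self-describing, jobs can be distributed to cluster nodes in any order and their results regrouped afterwards by parameter values.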

Thanks to its high modularity, this platform is proving highly flexible. We are currently adapting it to a modified, cluster-ready version of the V-REP simulator. These simulations will support experiments on similar real-world hardware that is currently being set up.

pypot

Participants : Pierre Rouanet [correspondant] , Steve N'Guyen, Matthieu Lapeyre.

Pypot is a framework developed to make it easy and fast to control custom robots based on Dynamixel motors. It provides different levels of abstraction corresponding to different types of use. More precisely, you can use pypot to:

- directly control Robotis motors through a USB2serial device,
- define the structure of your particular robot and control it through high-level commands,
- define primitives and easily combine them to create complex behavior.
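The idea of combining primitives can be illustrated without hardware. In the following plain-Python sketch, a primitive is simply a function of time that returns goal positions (in degrees) for some motors, and concurrently running primitives are merged by averaging the requests that target the same motor. This is a simplified model written for illustration: the function and motor names are hypothetical and do not reproduce pypot's actual primitive classes, and averaging is only one possible merging policy.

```python
import math
from collections import defaultdict
from statistics import fmean

# Simplified model for illustration, not pypot's API.

def sinus(motor, amplitude, freq):
    """Hypothetical primitive: oscillate one motor around 0 degrees."""
    return lambda t: {motor: amplitude * math.sin(2 * math.pi * freq * t)}

def hold(positions):
    """Hypothetical primitive: keep some motors at fixed positions."""
    return lambda t: dict(positions)

def combine(primitives, t):
    """Merge the commands of all running primitives at time t; when several
    primitives drive the same motor, their requests are averaged."""
    requests = defaultdict(list)
    for primitive in primitives:
        for motor, position in primitive(t).items():
            requests[motor].append(position)
    return {motor: fmean(values) for motor, values in requests.items()}

# At t = 0.5 s the oscillation requests 30 degrees for head_z and the hold
# primitive requests 10 degrees, so the merged head_z command is their mean.
print(combine([sinus("head_z", 30, 0.5), hold({"head_z": 10, "head_y": -5})], t=0.5))
```

Keeping primitives as independent producers of commands, and resolving conflicts in a single merging step, is what makes it easy to add or remove a behavior without touching the others.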

Pypot is written entirely in Python to allow for fast development, easy deployment, and quick scripting by developers who are not necessarily experts. It can also benefit from the scientific and machine learning libraries available in Python. The serial communication is handled through the standard library and allows for rather high performance (10 ms sensorimotor loop). It is cross-platform and has been tested on Linux, Windows and Mac OS.
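A 10 ms sensorimotor loop is essentially a fixed-period loop that reads sensors, computes a command and writes it back. The generic Python pattern below is not pypot's internal code, which the report does not describe. It schedules each deadline from the start time so that small delays do not accumulate as drift, and it counts the iterations that overran their budget. The clock and sleep functions are injectable, so the loop can be tested without hardware or real time; `step` stands for a hypothetical read-compute-write callback.

```python
import time

# Generic fixed-period pattern, not pypot's internal loop.
def run_loop(step, period=0.01, n_iterations=100, clock=time.monotonic, sleep=time.sleep):
    """Call step() every `period` seconds (0.01 s for a 10 ms loop).

    Deadlines are computed from the start time rather than from the end of
    the previous iteration, so small delays do not accumulate as drift.
    Returns the number of iterations that overran their deadline."""
    start = clock()
    overruns = 0
    for i in range(n_iterations):
        step()  # e.g. read present positions, compute and send goal positions
        remaining = start + (i + 1) * period - clock()
        if remaining > 0:
            sleep(remaining)
        else:
            overruns += 1  # this iteration took longer than its time budget
    return overruns
```

On a general-purpose operating system, `time.sleep` only guarantees a minimum delay, so a 10 ms period is achievable in Python but individual iterations may jitter; monitoring the overrun count is a cheap way to check that the loop keeps up.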

Pypot is also compatible with the V-REP simulator (http://www.coppeliarobotics.com). This allows switching transparently from a real robot to its simulated equivalent without modifying the code.

Pypot also defines a REST API that enables the development of web apps, such as a web control interface that facilitates the use of a robotic platform.
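As an illustration of what exposing a robot through a REST API involves, here is a minimal sketch using only Python's standard library. The routes (`/motors/<motor>/<register>`), the in-memory `ROBOT` dictionary and the register names are hypothetical and are not pypot's actual REST API: reading a register is a `GET`, and only `goal_position` can be written, with a `POST` whose body is a JSON number. The request logic lives in a plain `handle` function so that it can be tested without opening a socket.

```python
import json
import re
from http.server import BaseHTTPRequestHandler

# Hypothetical routes and registers, not pypot's actual REST API.
ROBOT = {"m1": {"present_position": 0.0, "goal_position": 0.0}}
ROUTE = re.compile(r"^/motors/(?P<motor>\w+)/(?P<register>\w+)$")

def handle(method, path, body, robot):
    """Return (HTTP status, JSON-serializable payload) for one request."""
    match = ROUTE.match(path)
    if not match or match["motor"] not in robot:
        return 404, {"error": "unknown motor"}
    motor, register = robot[match["motor"]], match["register"]
    if register not in motor:
        return 404, {"error": "unknown register"}
    if method == "GET":
        return 200, {register: motor[register]}
    if method == "POST" and register == "goal_position":
        try:
            motor[register] = float(json.loads(body))
        except (ValueError, TypeError):
            return 400, {"error": "body must be a JSON number"}
        return 200, {register: motor[register]}
    return 405, {"error": "method not allowed"}

class Handler(BaseHTTPRequestHandler):
    """Thin HTTP wrapper around handle(), serving JSON."""
    def _reply(self, method):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length).decode() if length else ""
        status, payload = handle(method, self.path, body, ROBOT)
        data = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self):
        self._reply("GET")

    def do_POST(self):
        self._reply("POST")
```

To serve it locally, one could run `HTTPServer(("127.0.0.1", 8080), Handler).serve_forever()` (with `HTTPServer` imported from `http.server`) and query `http://127.0.0.1:8080/motors/m1/present_position` from a web page. A real implementation would forward these requests to the motor controllers instead of a dictionary.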

Pypot is part of the Poppy project (http://www.poppy-project.org) and has been released under the open source GPL v3 license. More details are available on the pypot website: https://github.com/poppy-project/pypot